No topic in the AI community is hotter right now than DeepSeek. It is popular, but using it often runs into problems, such as the suspension of API top-ups on the official website. Just when I tried to use it, I hit the message none of us ever wants to see again: "Server is busy, please try later."

AI giants that once fought their own battles are now embracing DeepSeek-R1, and enterprises, institutions, and universities are also scrambling to integrate it. It seems to have become standard equipment overnight.

This raises a question: how many versions of DeepSeek R1 are there? The web (e.g., Taobao) is also being flooded with local deployment tutorials, which makes things even more confusing.

Today, let's unravel the various versions of DeepSeek so you can get your head around them.

I. DeepSeek mainstream versions

What are the full-blooded, distilled, and quantized versions?

DeepSeek R1 comes in several versions; the ones below are the most common on the market. For reference, the two main sources are:

Appendix 1: Official releases by DeepSeek: https://huggingface.co/collections/deepseek-ai/deepseek-r1-678e1e131c0169c0bc89728d

Appendix 2: Provided by third parties (e.g., published by Ollama): https://ollama.com/

- Full-blooded version: officially released by DeepSeek. This is the full-parameter model, with the largest parameter count (671B, i.e., 671 billion) and the strongest performance on complex reasoning and long-context understanding. The full-blooded R1 is further trained from DeepSeek-V3 using multi-stage training centered on reinforcement learning to strengthen its reasoning; the variant trained with reinforcement learning alone, without supervised fine-tuning (SFT), is its sibling DeepSeek-R1-Zero. On Hugging Face, only the 671B models without Distill in the name qualify, and DeepSeek-R1 is the one normally meant by "full-blooded".

- Distilled version: also officially released by DeepSeek. These are much smaller models (1.5B to 70B parameters) obtained through knowledge distillation, in which a smaller model is trained to reproduce the large model's outputs. On Hugging Face, any model with Distill in its name is a distilled model. They are built on open-source base models such as Alibaba's Qwen and Meta's Llama, and run on a much wider range of hardware.

- Quantized version: quantization shrinks a model by storing its weights at lower numerical precision, trading some accuracy for a smaller size. This reduces the resources needed to run the model and makes it run more efficiently, and it is common among the locally deployable models provided by third parties such as Ollama.
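The naming rule in the list above can be sketched as a small helper. This is only an illustration: the function name `classify_r1_checkpoint` is hypothetical, and the rule is purely name-based, so it says nothing about whether a given build has also been quantized.

```python
def classify_r1_checkpoint(repo_name: str) -> str:
    """Label a DeepSeek-R1 repository name as 'full' or 'distilled'.

    Assumption: the check is based only on the name, following the rule
    that Hugging Face repositories containing "Distill" are distilled models.
    """
    # Drop the organization prefix, e.g. "deepseek-ai/".
    short_name = repo_name.split("/")[-1]
    if "distill" in short_name.lower():
        return "distilled"
    return "full"


for name in [
    "deepseek-ai/DeepSeek-R1",
    "deepseek-ai/DeepSeek-R1-Distill-Qwen-32B",
    "deepseek-ai/DeepSeek-R1-Distill-Llama-70B",
]:
    print(name, "->", classify_r1_checkpoint(name))
```

A name check like this is a quick first filter when reading a tutorial or product page; it cannot confirm what a hosted service actually runs.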

Note that although the quantized version published by Ollama also has 671 billion parameters, it stores them at lower precision, so its performance falls short of the officially released full-blooded version.
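To make the precision trade-off concrete, here is a minimal sketch of symmetric 8-bit quantization on a handful of made-up weights. It is a generic textbook scheme chosen for illustration, not the specific method used by Ollama or DeepSeek, and the sample values are invented.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: each weight w is stored as an
    integer q in [-127, 127] such that w is approximately scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floating-point weights from the integers."""
    return [v * scale for v in q]


# Invented example weights, for illustration only.
weights = [0.8213, -0.4127, 0.0031, 0.2999, -0.9954]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized integers:", q)
print(f"max round-trip error: {max_error:.5f}")
```

The parameter count is unchanged after quantization; what changes is how many bits each parameter occupies, and the small round-trip error is the "accuracy" being given up.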

II. How to tell a real full-blooded version from a fake one?

Judging by deployment cost: many products now claim to have integrated DeepSeek-R1, so how can you tell whether it is the full-blooded version? Deploying the "full-blooded version" is expensive. Generally speaking, the two 671B-parameter models are meant for cloud providers and leading Internet companies rather than ordinary users (according to insiders, deploying and running a real "full-blooded version" costs about 2.5 to 3 million). From this point of view, most of the big vendors have probably integrated the real "full-blooded version".
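A rough back-of-the-envelope calculation shows why the 671B models are out of reach for ordinary hardware. The sketch below estimates the memory needed for the weights alone as parameters times bits per weight; the bit widths are illustrative assumptions, and real deployments also need memory for activations and the KV cache.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Bit widths here are assumptions chosen for illustration.
configs = [
    ("671B at 8 bits per weight", 671e9, 8),
    ("671B at 4 bits per weight", 671e9, 4),
    ("70B distilled at 16 bits per weight", 70e9, 16),
    ("1.5B distilled at 16 bits per weight", 1.5e9, 16),
]

for label, params, bits in configs:
    print(f"{label}: ~{weight_memory_gb(params, bits):.1f} GB")
```

Even at 4 bits per weight, a 671B model needs hundreds of gigabytes for its weights, while the smallest distilled model fits in a few gigabytes, which matches the gap in deployment cost described above.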

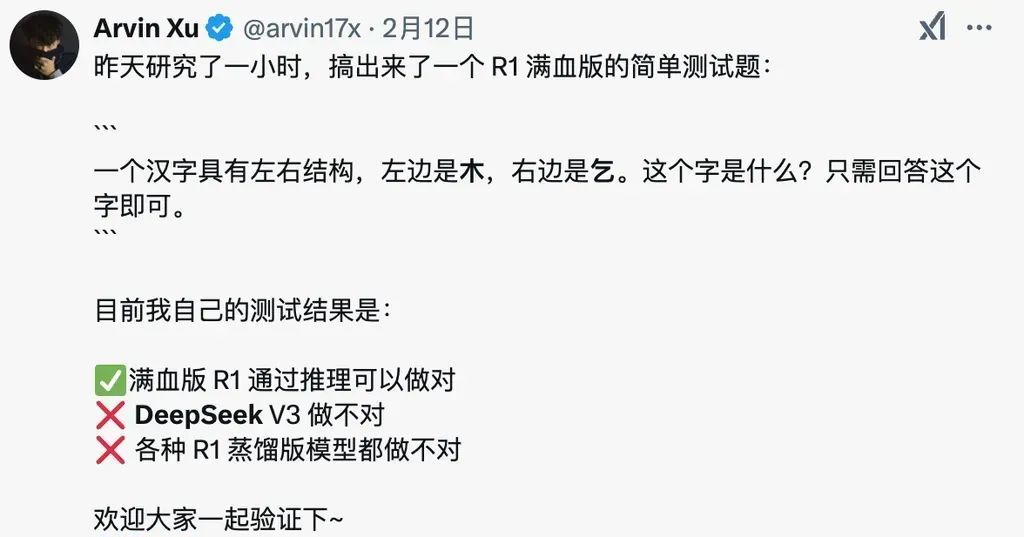

A test circulated by Internet users: it has no strict scientific basis, but it is worth a try. Question: A Chinese character has a left-right structure, with 木 (wood) on the left and 乞 (beg) on the right. What is this character?

It is said that only the "full-blooded version" can answer quickly and correctly.

III. Where can I try the "full-blooded" DeepSeek?

I previously introduced two alternatives (neither has officially stated whether it runs the "full-blooded version"), and having used them myself, I found the results quite good. See the earlier post: "DeepSeek always crashes? Here are 2 'temporary' alternatives".

Some applications have explicitly announced that they have integrated the "full-blooded version". Here are a few for reference:

- WeChat Search (internal testing phase)

- Tencent Yuanbao

- DingTalk

- Secret Tower AI Search

- Baidu Search (freshly launched, go try it)

IV. Final words

Which version of DeepSeek you choose ultimately depends on your actual needs, hardware, and budget. For most ordinary users, using the official website or the online API directly is likely more convenient. Local deployment is worth considering if you have special data-privacy requirements or need custom development.