On May 28, Ant Group's Bailing (Ling) large model team officially open-sourced Ming-lite-omni, a unified multimodal large model.

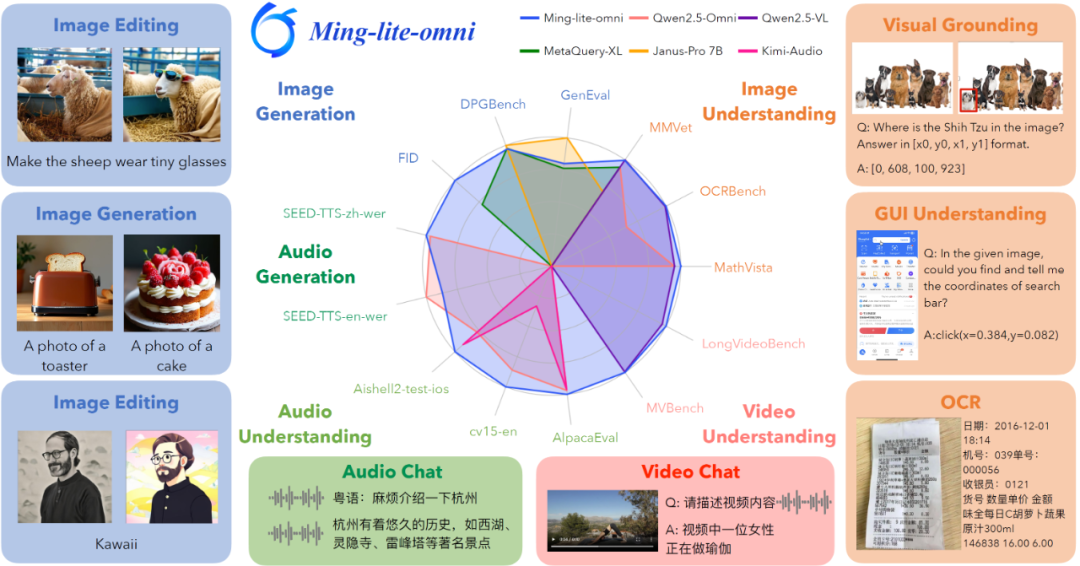

Ming-lite-omni is an omni-modal model built on the MoE (Mixture-of-Experts) architecture of Ling-lite, with 22B total parameters and 3B activated parameters. It supports both "unified cross-modal fusion" and "unified comprehension and generation".
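Sparse activation is what allows an MoE model to hold many parameters while using only a fraction of them per token; for the figures above, 3B / 22B is roughly 13.6%. The toy sketch below illustrates generic top-k MoE routing in plain Python. The class name `ToyMoE`, the expert count, `top_k`, the dimensions, and the use of a single linear matrix per expert are all illustrative assumptions, not the actual design of Ming-lite-omni or Ling-lite.

```python
import math
import random

# Toy sketch of generic top-k Mixture-of-Experts routing.
# All sizes are hypothetical and chosen only for illustration.

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matvec(w, x):
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

class ToyMoE:
    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.dim, self.num_experts, self.top_k = dim, num_experts, top_k
        # Router: produces one score per expert for each token.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is one dim x dim linear map (real experts are larger FFNs).
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, x):
        scores = matvec(self.router, x)
        # Keep only the top_k highest-scoring experts for this token.
        chosen = sorted(range(self.num_experts),
                        key=lambda i: scores[i], reverse=True)[: self.top_k]
        gates = softmax([scores[i] for i in chosen])
        out = [0.0] * self.dim
        for g, i in zip(gates, chosen):
            y = matvec(self.experts[i], x)  # only chosen experts are evaluated
            out = [o + g * yi for o, yi in zip(out, y)]
        return out, chosen

    def total_params(self):
        return self.num_experts * self.dim * self.dim + self.num_experts * self.dim

    def active_params(self):
        # The router always runs; only top_k experts contribute per token.
        return self.top_k * self.dim * self.dim + self.num_experts * self.dim

if __name__ == "__main__":
    moe = ToyMoE(dim=8, num_experts=8, top_k=2)
    out, chosen = moe.forward([1.0] * 8)
    print(chosen, moe.active_params(), "/", moe.total_params())
    print(f"{3 / 22:.1%}")  # active share for 3B of 22B parameters
```

With 8 experts and `top_k=2`, each token touches 192 of the toy layer's 576 parameters; the same principle, at far larger scale, is how a 22B-parameter MoE model can run with about 3B parameters active.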

With only 3B activated parameters, Ming-lite-omni matches or outperforms leading 10B-scale multimodal large models on several comprehension and generation benchmarks. According to the team, it is the first open-source model known to match GPT-4o in the range of modalities it supports.

Going forward, the Ant Group Bailing large model team plans to keep improving Ming-lite-omni on omni-modal comprehension and generation tasks and to strengthen its complex multimodal reasoning. It will also train a larger omni-modal model, Ming-plus-omni, to tackle more specialized and domain-specific complex interaction problems.

The model weights and inference code for Ming-lite-omni are now open source.

Github: https://github.com/inclusionAI/Ming/tree/main/Ming-omni

HuggingFace: https://huggingface.co/inclusionAI/Ming-Lite-Omni

ModelScope: https://modelscope.cn/models/inclusionAI/Ming-Lite-Omni

Project Page: https://lucaria-academy.github.io/Ming-Omni/