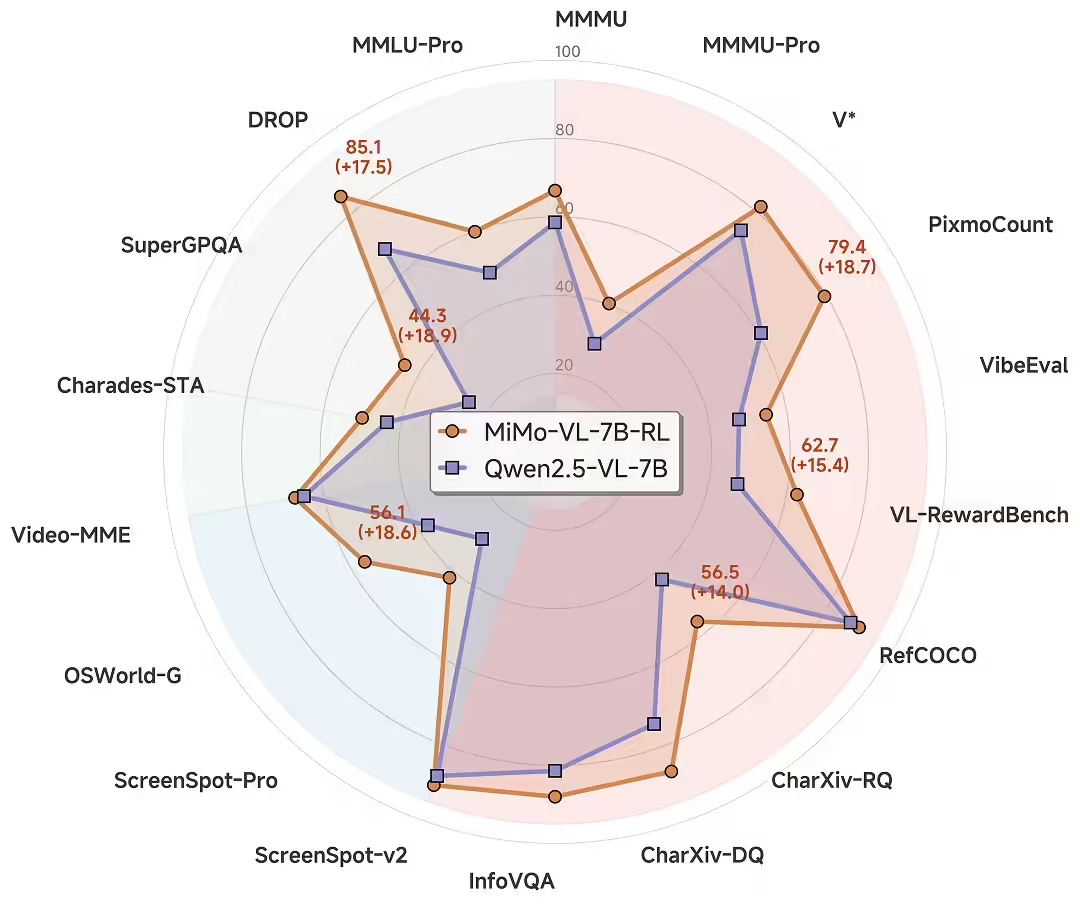

On May 30, Xiaomi MiMo's official account announced that Xiaomi's multimodal large model MiMo-VL is now officially open source. According to Xiaomi, it significantly outperforms Qwen2.5-VL-7B, the benchmark multimodal model of the same size, on multiple tasks including general Q&A and understanding and reasoning over images, video, and language. It also rivals dedicated models on GUI Grounding tasks, positioning it for the coming Agent era.

MiMo-VL-7B retains the text-only reasoning capability of MiMo-7B. On multimodal reasoning tasks such as the Olympiad benchmark (OlympiadBench) and several math competition benchmarks (MathVision, MathVerse), it uses only 7B parameters yet significantly outperforms Alibaba's Qwen2.5-VL-72B and QVQ-72B-Preview, models ten times its size. It also surpasses the closed-source model GPT-4o.

In an internal large-model arena that evaluates real user experience, MiMo-VL-7B surpasses GPT-4o and ranks first among open-source models.

Beyond tasks such as complex image reasoning and Q&A, MiMo-VL-7B also shows promising potential on GUI operations spanning 10+ steps; it can even add a Xiaomi SU7 to your wishlist for you.

The model combines high-quality pre-training data with an innovative mixed on-policy reinforcement learning algorithm (Mixed On-policy Reinforcement Learning, MORL):

- Multi-stage pre-training:

  - We collect, clean, and synthesize high-quality multimodal pre-training data covering image-text pairs, video-text pairs, GUI operation sequences, and other data types, totaling 2.4T tokens. By adjusting the proportions of the different data types stage by stage, we strengthen long-range multimodal reasoning.

- Mixed on-policy reinforcement learning:

  - We mix feedback signals from text reasoning, multimodal perception plus reasoning, RLHF, and other sources, and use an on-policy reinforcement learning algorithm to stabilize and accelerate training, improving the model's reasoning, perception, and user experience across the board.
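To make "mixing feedback signals" more concrete, here is a minimal Python sketch of the general idea. It is not Xiaomi's MORL implementation. It assumes each sample carries a task label and routes that sample to a task-specific reward: exact match for verifiable text reasoning, box IoU for GUI grounding, and a trivial placeholder standing in for an RLHF reward model. It then subtracts the batch mean as a simple baseline. The `Sample` record, task labels, and reward functions are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical sample record: task label, model output, reference target.
@dataclass
class Sample:
    task: str          # illustrative labels: "text_reasoning", "grounding", "rlhf"
    output: object
    reference: object


def exact_match_reward(output, reference) -> float:
    # Verifiable text reasoning: 1.0 if the final answer matches the reference.
    return 1.0 if str(output).strip() == str(reference).strip() else 0.0


def iou_reward(output, reference) -> float:
    # Perception / GUI grounding: IoU of predicted vs. reference box (x1, y1, x2, y2).
    ax1, ay1, ax2, ay2 = output
    bx1, by1, bx2, by2 = reference
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def reward_model_score(output, reference) -> float:
    # Hypothetical placeholder for an RLHF reward model; returns a score in [0, 1].
    return min(1.0, len(str(output)) / 100.0)


# Routing table from task label to reward function (assumed, for illustration).
REWARD_FNS: Dict[str, Callable[[object, object], float]] = {
    "text_reasoning": exact_match_reward,
    "grounding": iou_reward,
    "rlhf": reward_model_score,
}


def mixed_rewards(batch: List[Sample]) -> List[float]:
    # Each sample is scored by the reward function for its task type,
    # so one on-policy batch can mix heterogeneous feedback signals.
    return [REWARD_FNS[s.task](s.output, s.reference) for s in batch]


def advantages(rewards: List[float]) -> List[float]:
    # Simple mean baseline: advantage = reward - batch mean.
    mean = sum(rewards) / len(rewards)
    return [r - mean for r in rewards]


batch = [
    Sample("text_reasoning", "42", "42"),
    Sample("grounding", (0, 0, 10, 10), (0, 0, 10, 20)),
    Sample("rlhf", "x" * 50, None),
]
rs = mixed_rewards(batch)
print(rs)  # [1.0, 0.5, 0.5]
print(advantages(rs))
```

Keeping every per-task reward on a shared [0, 1] scale is a design choice of this sketch that lets heterogeneous signals sit in one batch; the technical report is the authority on how MiMo-VL actually combines them.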

Both the pre-RL and post-RL versions of MiMo-VL-7B have been open-sourced. IT Home attaches the open-source link (https://huggingface.co/XiaomiMiMo) and the technical report (https://github.com/XiaomiMiMo/MiMo-VL/blob/main/MiMo-VL-Technical-Report.pdf).

The evaluation framework used for MiMo-VL-7B, which supports 50+ evaluation tasks, has also been open-sourced on GitHub: https://github.com/XiaomiMiMo/lmms-eval