June 26: Ars Technica reported today that court documents made public on Monday (local time) disclose that the artificial intelligence company Anthropic spent millions of dollars disassembling physical books and scanning them into digital files to train Claude, its AI assistant similar to ChatGPT. To obtain training data, the company cut large numbers of books out of their bindings, scanned them into its systems, and then simply discarded the originals.

The 32-page judgment discloses that in February 2024 Anthropic hired Tom Turvey, who had been in charge of partnerships for the Google Books program, and entrusted him with gaining "access to books from around the world". The hire was evidently intended to replicate Google's book digitization model, which the courts had found to be fair use.

Ultimately, Judge William Alsup ruled that the scanning method constituted fair use, on the grounds that the books were legally purchased by Anthropic and destroyed immediately after scanning, and that the digital files were for internal use only and had not been disseminated. He characterized the process as a "space-saving" format conversion, which counts as "transformative" for the purposes of fair use. Had the company followed this path from the beginning, it might have set the first precedent for AI fair use, but its early piracy undermined the legitimacy of its position.

The core reason is quite simple: AI training requires massive amounts of high-quality text. To build large language models, researchers need to feed billions of words into a neural network, iteratively training the model to build relationships between words and concepts.

The quality of the training data directly affects the accuracy of the model's output. Compared with noisy material such as online reviews, professionally edited books and articles can significantly improve an AI's linguistic ability.

AI companies are hungry for published content but are often reluctant to spend time negotiating licenses. The "first sale doctrine" in the United States provides legal leeway: after purchasing a physical book, the buyer can dispose of that copy as they see fit. This makes the purchase of books a legitimate "workaround."

Like many of its peers, Anthropic initially chose to take a shortcut around copyright. 1AI has learned from court materials that, in order to bypass the lengthy and complicated licensing process, CEO Amodei had advocated the use of pirated e-books. By 2024, however, legal considerations led the company to seek safer alternatives.

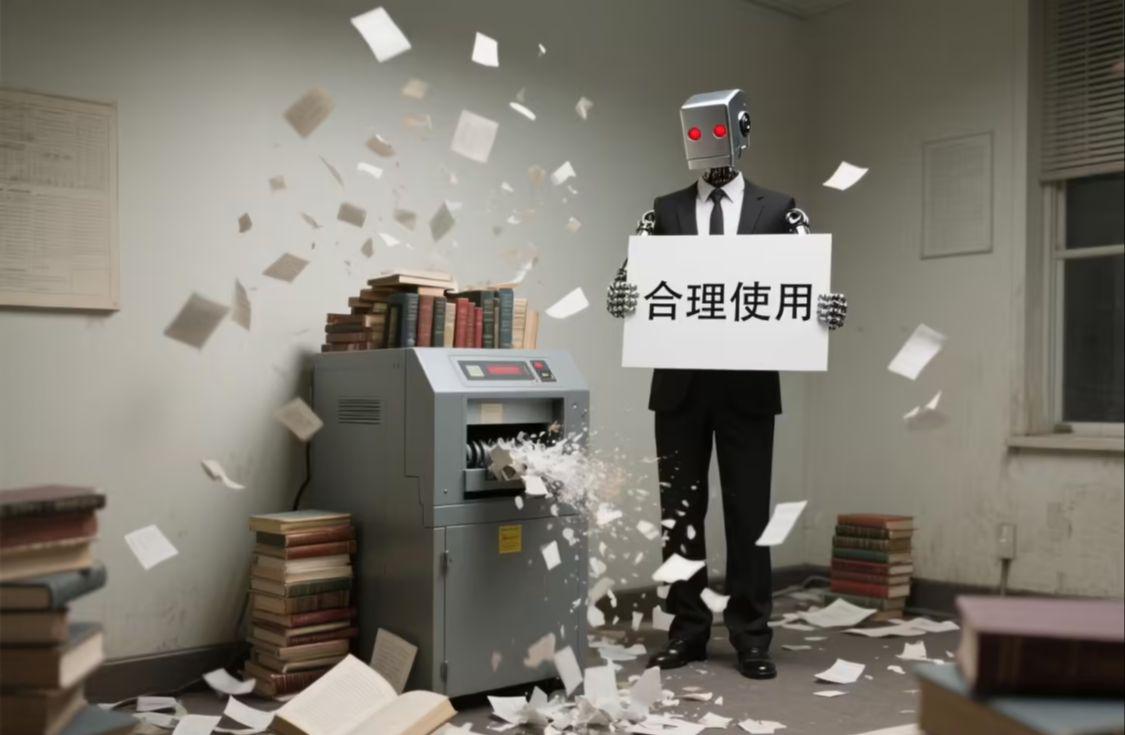

Acquiring used books was an ideal solution: it required no license negotiations and yielded high-quality training text. To speed up digitization, Anthropic used "destructive scanning": it bought books in bulk, removed their bindings, cut the pages, scanned entire lots into machine-readable PDF files, and then discarded all of the paper copies. The whole process cost millions of dollars.

Most of the company's purchases were ordinary used books from retail outlets. Yet non-destructive scanning technology is already mature. The Internet Archive, for example, has developed methods of digitizing books that preserve the originals. Earlier this month, OpenAI and Microsoft also announced a partnership with Harvard University's libraries to train AI on nearly a million public-domain books, which will be digitized while still being preserved.