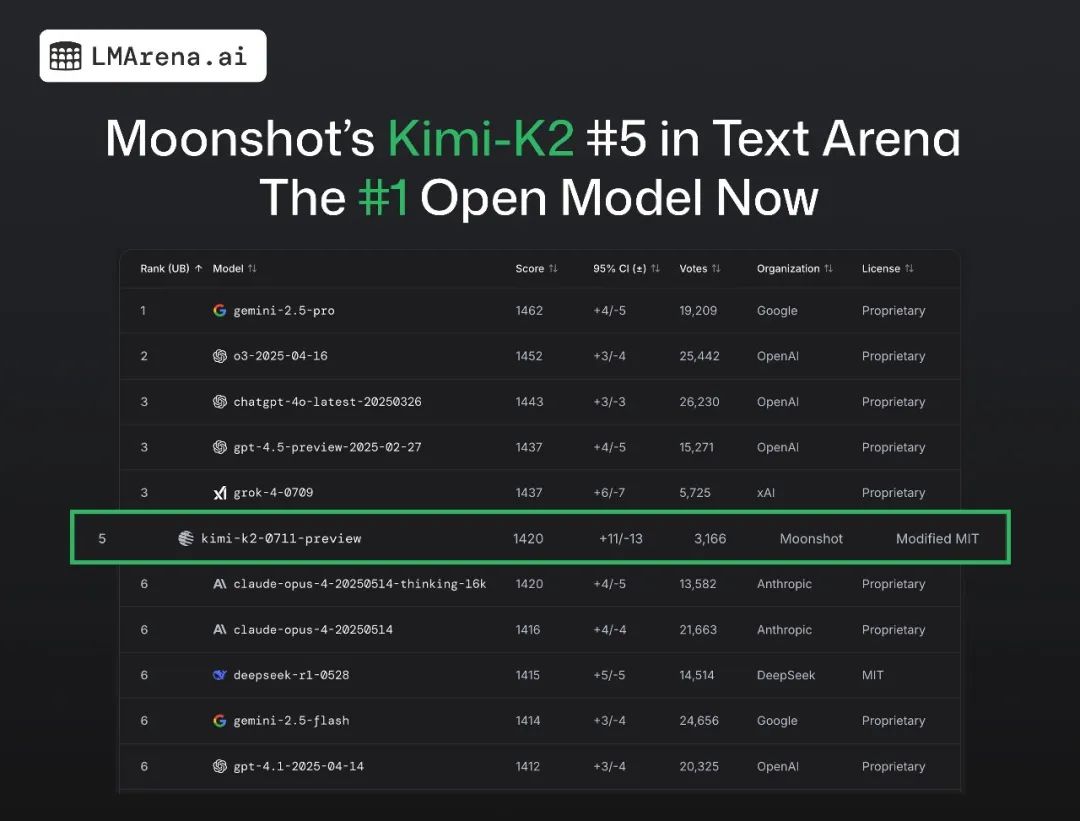

July 19, 2025 - LMArena, the authoritative large-model leaderboard, has published its latest rankings, which include the recently released Kimi K2. Kimi K2 overtook DeepSeek R1 to take first place among open-source models.

LMArena stated that Kimi K2 earned fifth place on its overall leaderboard, based on its performance and 3,000 community votes.

Notably, Kimi K2 and DeepSeek R1 are the two Chinese models in LMArena's top 10, while the global top 20 includes seven Chinese models, among them MiniMax M1 and Qwen3-235B.

Kimi K2, released and open-sourced last week, is billed as a "MoE-architecture base model with superb coding and agent capabilities". According to the official specifications, K2 has 1T total parameters, 32B activated parameters, and a 128K context length, and it supports tool calls, web search, and more.
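The gap between total and activated parameters comes from the MoE (mixture-of-experts) design: a router sends each token to only a few experts, so most weights sit idle on any given forward pass. The Python sketch below is a toy illustration of generic top-k routing; the expert count, the router logits, and the softmax-over-selected-experts gate are hypothetical choices for illustration, not K2's actual configuration or gating function. It also computes the activated fraction implied by the official headline figures (32B of 1T).

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts.

    Assumption: gate weights are a softmax over the selected experts' logits,
    a common generic choice, not necessarily what K2 uses.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the k selected experts; the other experts are never run."""
    out = 0.0
    for idx, weight in top_k_route(router_logits, k):
        out += weight * experts[idx](x)
    return out


def activated_fraction(total_params, active_params):
    """Share of parameters used per token."""
    return active_params / total_params


# Hypothetical toy setup: 8 experts, each just scales its input.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

print(top_k_route(logits, k=2))
print(moe_forward(1.0, experts, logits, k=2))
# Official headline figures from the text: 32B activated out of 1T total.
print(f"{activated_fraction(1e12, 32e9):.1%}")
```

In a real model each expert is a large feed-forward block rather than a scalar function, but the principle is the same: per-token compute and activated parameters scale with k, not with the total number of experts, which is how a 1T-parameter model can activate only about 3.2% of its weights per token.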

Kimi K2 reportedly achieved state-of-the-art scores among open-source models on benchmarks such as SWE-bench Verified, Tau2, and AceBench, demonstrating leading capabilities in coding, agent, and mathematical reasoning tasks.

In addition, Liu Shaowei, a member of the Kimi K2 development team, recently responded on Zhihu to the question of whether "Kimi K2 adopts the DeepSeek V3 architecture". He said: "It does inherit the structure of DeepSeek V3, but adjusts the structural parameters to fit Kimi's model." He added that the V3 architecture is simple and easy to implement, and that it fit the cost budget for the project, so the team chose to inherit the V3 architecture in its entirety.