-

OCR MODEL OPEN-SOURCED: 1B PARAMETERS, SOTA ON MULTIPLE CORE CAPABILITIES

On November 25, HunyuanOCR, a new open-source model with only 1B parameters, was announced. Built on Hunyuan's native multimodal architecture, it achieves SOTA (state of the art) results on many industry OCR application leaderboards. According to official sources, thanks to the "end-to-end" design philosophy of the Hunyuan multimodal large model, each of HunyuanOCR's functions achieves optimal results with only a single forward inference pass. The OCR expert model is built on Hunyuan's native multimodal architecture and consists of three main components: native... -

NI GUANGNAN: REPORT SHOWS THAT 80% OF INNOVATIVE U.S. AI COMPANIES USE CHINESE OPEN-SOURCE MODELS

November 15 - At the openEuler Summit, Ni Guangnan, academician of the Chinese Academy of Engineering, stated that the 15th Five-Year Plan clearly calls for high-level opening up to the outside world and a new pattern of win-win cooperation. China will pursue a more inclusive and mutually beneficial strategy of international scientific and technological cooperation in its future development. China today is a strong advocate, developer, and promoter of open source. He noted that in the AI era, several open-source communities led by Chinese companies have flourished internationally... - 1.8k

-

openPangu-Ultra-MoE-718B-V1.1 officially open-sourced and available for download, with model weights and technical details fully disclosed

October 17 - In September, Huawei's Pangu 718B model became a focus of the industry, ranking third among open-source models on the SuperCLUE leaderboard on the strength of a training philosophy built around focused thinking rather than piling on data. Huawei announced yesterday that openPangu-Ultra-MoE-718B-V1.1 is now officially open-sourced on the GitCode platform, with model weights and technical details fully disclosed. Hardware requirements: Atlas 800T A2 (64GB, ≥ 32 cards), supporting bare metal or Dock... - 1.1k

-

AI-generated games: Kunlun Tech releases and open-sources the Matrix-Game 2.0 and Matrix-3D models

August 12 - Kunlun Tech's Skywork AI Technology Release Week began on August 11, with one model released each day for five consecutive days, covering core multimodal AI scenarios. Today, Kunlun released "Matrix-Game 2.0", an upgraded version of the Matrix-Game interactive world model in its self-developed Matrix series of world models. The company describes it as a world model that achieves interactive, real-time, long-sequence generation in general scenarios, and is releasing it to advance the development of interactive world modeling. - 759

-

OpenAI launches two open source models gpt-oss-120b / 20b, performance close to o4-mini/o3-mini

August 6 - OpenAI announced the release of two open-source models, gpt-oss-120b and gpt-oss-20b, its first open-source language models since the 2019 release of GPT-2. Both can be downloaded for free from the Hugging Face developer platform, and OpenAI describes both as performing "at the cutting edge" on a number of benchmarks used to compare open-source models. According to OpenAI, gpt-oss-120b performs "at the cutting edge" in core reasoning... - 1.3k

-

OpenAI's First Open Source Model? Mysterious Horizon Alpha Emerges, Surpassing Kimi K2 in EQ-Bench Creative Writing Rankings

August 2 - Tech outlet WinBuzz reported in a blog post yesterday (August 1) that a mysterious AI model called Horizon Alpha has appeared on the OpenRouter platform and quickly topped the EQ-Bench Creative Writing chart; it is speculated to be OpenAI's first open-source AI model. Horizon Alpha was released on July 31 and has achieved excellent results on the EQ-Bench charts, surpassing Kimi K2... -

Zhipu Releases Next-Generation Flagship Open-Source Model GLM-4.5, Built for Agent Applications

July 29 - Yesterday, Zhipu released GLM-4.5, its new-generation flagship model, a foundation model built specifically for agent applications. It is open-sourced simultaneously on Hugging Face and ModelScope, with model weights released under the MIT License. 1AI summarizes the main points of the official introduction as follows: GLM-4.5 achieves open-source SOTA in combined reasoning, coding, and agent capabilities, and performs best among domestic models in human-judged comparative evaluations of real-world coding agents; it uses a Mixture-of-Experts (MoE) architecture, including GLM-4.5 ... - 9.7k

-

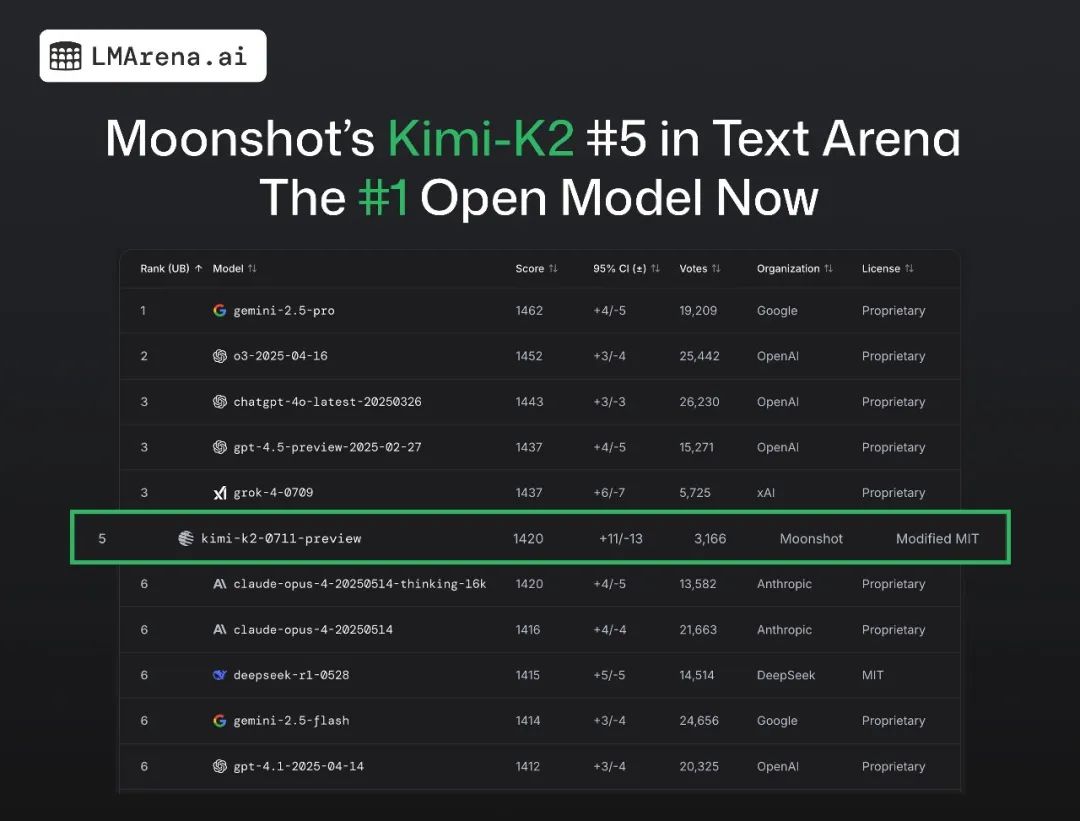

Kimi K2 Overtakes DeepSeek R1 for First Place Among Open-Source Models

July 19 - LMArena, a leading large-model leaderboard, has released its latest rankings, in which the recently released Kimi K2 overtakes DeepSeek R1 as the top open-source model. According to LMArena, Kimi K2 placed fifth in the overall ranking, based on its performance and 3,000 community votes. Notably, Kimi K2 and DeepSeek R1 are the two Chinese models in the LMArena top 10, but... - 28.6k
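How do community votes become a ranking? LMArena's published methodology fits a Bradley-Terry model to pairwise human votes; the minimal Python sketch below instead uses the classic online Elo update, a closely related and simpler technique, purely to illustrate the idea. The K-factor of 32, the starting rating of 1000, and the model names and votes are hypothetical and do not reflect LMArena's actual parameters or data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_ratings(votes: Iterable[Tuple[str, str]],
                k: float = 32.0, initial: float = 1000.0) -> Dict[str, float]:
    """Process (winner, loser) votes in order and return each model's rating."""
    ratings: Dict[str, float] = defaultdict(lambda: initial)
    for winner, loser in votes:
        # An upset (low expected score for the winner) moves more points.
        e_w = expected_score(ratings[winner], ratings[loser])
        delta = k * (1.0 - e_w)
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


if __name__ == "__main__":
    # Hypothetical head-to-head votes between three anonymous models.
    votes = [("model_a", "model_b"), ("model_a", "model_c"),
             ("model_b", "model_c"), ("model_a", "model_b")]
    for name, r in sorted(elo_ratings(votes).items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name}: {r:.1f}")
```

Each update moves the same number of points from loser to winner, so the total rating is conserved; a Bradley-Terry fit instead estimates all ratings jointly, which makes the result independent of vote order.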

-

Flux Kontext local installation and usage guide!

The open-source Kontext model has finally arrived. Its API has been out for some time and works very well, which is exactly why an open-source release had seemed hopeless; then, unexpectedly, the open-source model suddenly appeared. It may be the most fun image-editing model to play with in recent times. First, a quick look at what Flux Kontext offers: from its highlights, the model's purpose is clear; it is aimed squarely at intelligent image editing. Here's a quick preview of its effects and use cases: Style transfer: Prompt: Transform to 196... - 44.9k

-

OpenAI Open-Source Model Release Delayed Until Late Summer, CEO Altman Says "Well Worth the Wait"

June 11 (Bloomberg) -- OpenAI has again adjusted its open-source model release schedule. In a post on X on Tuesday, CEO Sam Altman announced that the open-source model release, originally planned for early summer this year, is now expected in late summer and will not happen in June. According to 1AI, Altman wrote in the post: "We're going to take a little more time with the open-weight model, which means people can expect it in late summer this year, but not in June." He also mentioned that OpenA... - 1.1k

-

Freepik Releases F Lite Open-Source Model, 80-Million-Image Dataset Sets New Benchmark for AI Image Generation

April 30 - Freepik, in partnership with AI startup Fal.ai, has launched F Lite, its latest open-source AI image model. What sets it apart is that it was trained entirely on the company's in-house dataset of about 80 million commercially licensed, safe-for-work (SFW) images. This approach sidesteps common legal risks and helps ensure the copyright safety of the model's output. According to the company, F Lite is a 10-billion-parameter diffusion model that delivers high-quality image generation. It is available in two versions: the standard version (... - 4.7k

-

OpenAI CEO Altman: DeepSeek hasn't affected GPT growth, will push better open source models

April 14 - At the recent TED 2025 conference, OpenAI CEO Sam Altman said, "The emergence of DeepSeek has not affected GPT's growth, and better open-source models will be introduced." Market research firm Appfigures recently reported that in global non-game app downloads for March 2025, ChatGPT beat Instagram and TikTok with 46 million downloads, becoming the world's most downloaded app (counting only the Apple App Store... - 1.6k

-

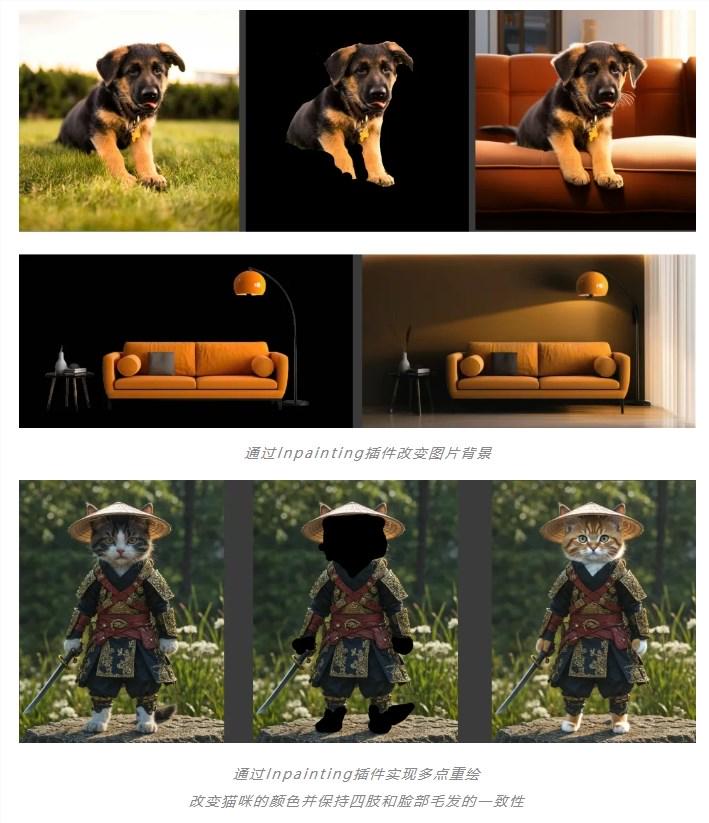

Precise image control! Tencent's open-source Hunyuan text-to-image model launches three ControlNet plug-ins

Tencent HunyuanDiT recently released three new ControlNet plug-ins to the community: tile (high-definition upscaling), inpainting (image restoration and outpainting), and lineart (generating images from line drawings), further expanding its ControlNet lineup. These plug-ins let the HunyuanDiT model cover a wider range of application scenarios, including art, creative work, architecture, photography, beauty, and e-commerce. For 80% of cases and scenarios, they provide more accurate... - 10.5k

-

The OpenBuddy open-source LLM team releases a Chinese version of Llama3.1-8B

Meta recently released Llama3.1, its new generation of open-source models, including a 405B-parameter version whose performance approaches or even surpasses closed-source models such as GPT-4 on some benchmarks. Llama3.1-8B-Instruct is the 8B-parameter version in the series; it supports English, German, French, Italian, Portuguese, Spanish, Hindi, and Thai, has a context length of up to 131,072 tokens, and has its knowledge cutoff updated to December 2023. To enhance Llama3.1-8B-Instr… - 10.1k

-

Shocking the AI world! Llama 3.1 leaked: an open source behemoth with 405 billion parameters is coming!

Llama3.1 has leaked! You heard that right: this 405-billion-parameter open-source model has caused a stir on Reddit. It may be the open-source model closest to GPT-4o so far, even surpassing it in some respects. Llama3.1 is a large language model developed by Meta (formerly Facebook). Although it has not been officially released, the leaked version has caused a sensation in the community. The leak includes not only the base model but also benchmark results for the 8B, 70B, and largest 405B versions. Performance comparison: Ll… - 6.9k

-

DeepSeek open-sources the DeepSeek-V2-Chat-0628 model, with improved mathematical reasoning capabilities

Recently, LMSYS released its latest Chatbot Arena leaderboard update. DeepSeek-V2-Chat-0628 ranked 11th overall, surpassing all open-source models, including Llama3-70B, Qwen2-72B, Nemotron-4-340B, and Gemma2-27B, and took first place on the global open-source model leaderboard. Compared with the 0507 open-source Chat version, DeepSeek-V2-0628 improves on code, mathematical reasoning, instruction following, role-playing, and more. - 16.6k

-

Kolors, an open-source AI painting model with Chinese-language support: ComfyUI deployment guide

Amid the wave of AI technology, Kolors, the large image-generation model launched by Kuaishou, has become a rising star of Chinese AI thanks to its strong performance and open-source approach. Kolors not only surpasses existing open-source models in image generation quality but also reaches a level comparable to commercial closed-source models, which quickly sparked heated discussion on social media. The open-source road of Kolors: open-sourcing Kolors is not only a technical milestone but also a reflection of Kuaishou's open attitude toward AI technology. At the World Artificial Intelligence Conference, Kuaishou announced that Kolors was officially open source, providing a package… - 23.4k

-

Alibaba Cloud: Tongyi Qianwen API daily call volume exceeds 100 million, and corporate users exceed 90,000

At today's Alibaba Cloud AI Leaders Summit, Alibaba Cloud Chief Technology Officer (CTO) Zhou Jingren revealed that Tongyi Qianwen's daily API call volume has exceeded 100 million, its corporate users have surpassed 90,000, and its open-source models have been downloaded 7 million times. Zhou also described Tongyi Qianwen's standing in the industry: according to a rigorous third-party evaluation, it has steadily closed the gap with GPT-4 in performance, becoming an industry leader. Especially in Chinese-language knowledge Q&A, Tongyi Qianwen has shown... - 12.8k

-

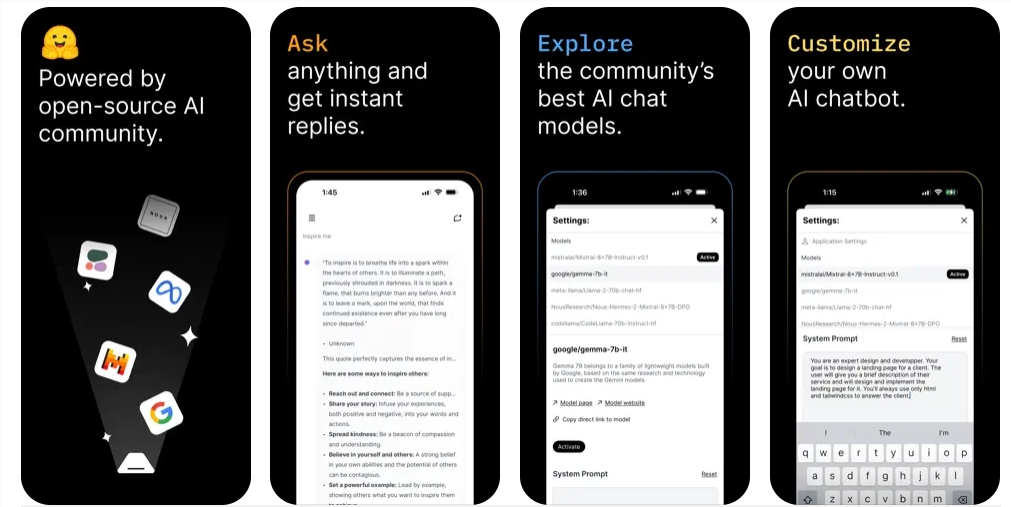

You can use open source models on your mobile phone! Hugging Face releases mobile app Hugging Chat

Hugging Face recently released "Hugging Chat", an iOS client app that lets users access and use various open-source models hosted on the Hugging Face platform from their phones. Download link: https://apps.apple.com/us/app/huggingchat/id6476778843 Web version: https://huggingface.co/chat Currently, the app provides six... - 11.9k

-

Replicate: Running open source machine learning models online

Replicate is a platform that simplifies running and deploying machine learning models, allowing users to run models at scale in the cloud without a deep understanding of how machine learning works. On the site, you can try deployed open-source models directly, and developers can also use it to publish their own models. Core features: Cloud model running: Replicate lets users run machine learning models with a few lines of code, either through Python libraries or by querying the API directly. Large library of ready-made models: Replicate's community provides thousands of… - 15.8k
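For the "query the API directly" route, the Python sketch below only builds (and does not send) a request to Replicate's HTTP predictions endpoint, using the standard library. It assumes the endpoint `https://api.replicate.com/v1/predictions`, a JSON body with `version` and `input` fields, and Bearer-token authentication, as we understand Replicate's public API documentation; `VERSION_ID` and the prompt are placeholders, and the current API reference should be checked before relying on any of this. For the library route, Replicate also publishes an official `replicate` Python package (for example `replicate.run`).

```python
import json
import os
import urllib.request

# Assumed endpoint for creating a prediction; verify against Replicate's current API docs.
REPLICATE_PREDICTIONS_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(version: str, model_input: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking Replicate to run one model version."""
    body = json.dumps({"version": version, "input": model_input}).encode("utf-8")
    return urllib.request.Request(
        REPLICATE_PREDICTIONS_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # VERSION_ID is a placeholder for a real model version identifier.
    token = os.environ.get("REPLICATE_API_TOKEN", "placeholder-token")
    req = build_prediction_request("VERSION_ID", {"prompt": "an astronaut riding a horse"}, token)
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Sending it would be: urllib.request.urlopen(req)  (requires a valid token)
```

Building the request separately from sending it keeps the payload easy to inspect and test without network access or credentials.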

-

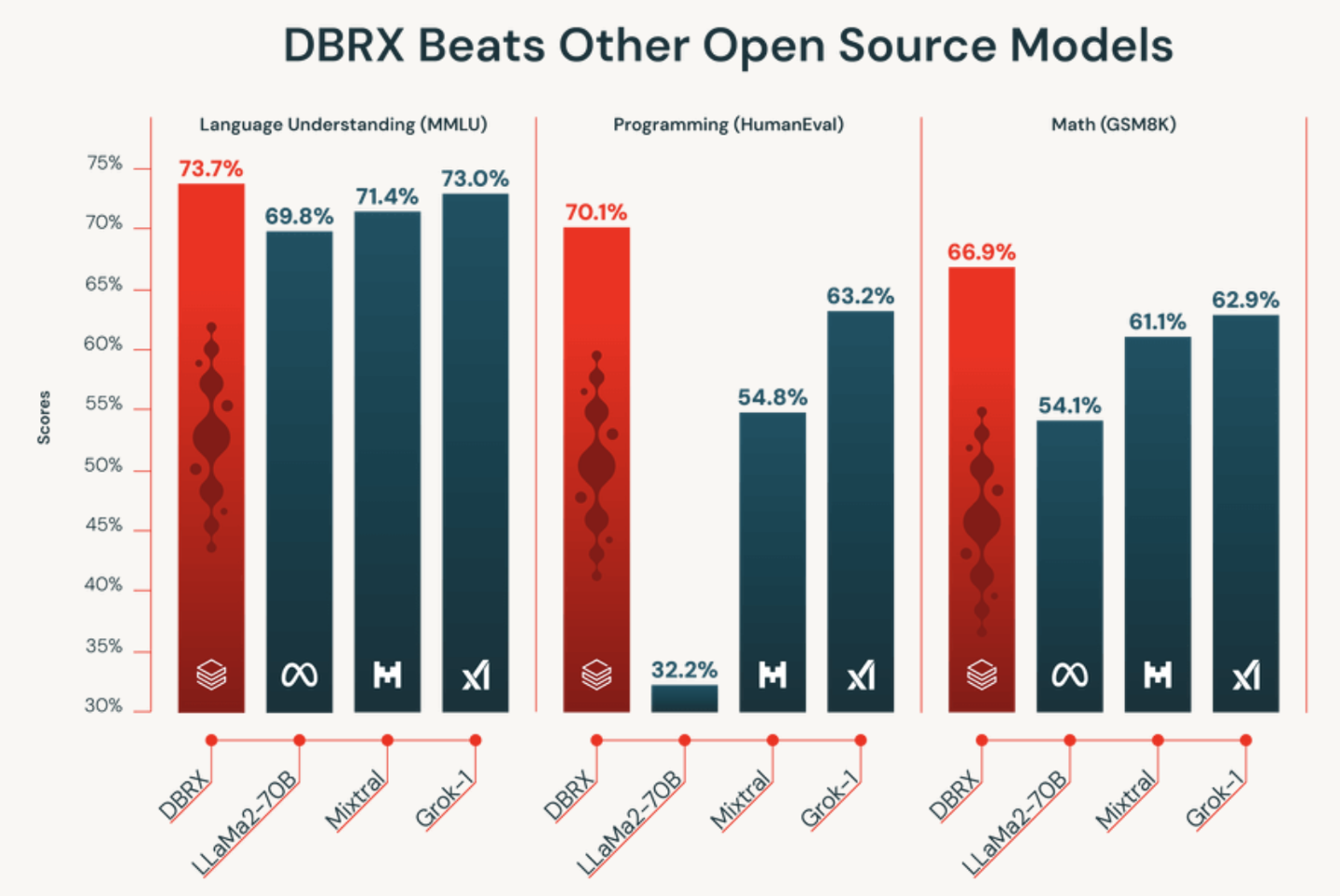

Databricks launches DBRX, a 132-billion-parameter large language model billed as "the most powerful open-source AI at this stage"

Databricks recently launched DBRX, a general-purpose large language model that it claims is "the most powerful open-source AI currently available" and that is said to surpass "all open-source models on the market" on various benchmarks. According to the official press release, DBRX is a Transformer-based large language model using a Mixture-of-Experts (MoE) architecture, with 132 billion parameters, pre-trained on 12T tokens of data. Researchers tested the model and found that compared with the market... - 4.3k
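The article names the Mixture-of-Experts (MoE) architecture without explaining it. The minimal, dependency-free Python sketch below shows generic top-k expert routing for a single token: a linear router scores every expert, only the k highest-scoring experts are run, and their outputs are mixed with softmax weights renormalized over the selected experts. This is the general technique only; DBRX's actual expert count, k, and layer design are not given here, so the toy experts, gate vectors, and `top_k=2` are illustrative assumptions. The same idea underlies "activated parameters" figures such as Qwen1.5-MoE-A2.7B's: per token, only the selected experts' weights are used.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: Sequence[Vector],
                experts: Sequence[Expert], top_k: int = 2) -> Vector:
    """Route one token vector x through the top_k experts chosen by a linear router."""
    # Router: one logit per expert, the dot product of x with that expert's gate vector.
    logits = [sum(w * xi for w, xi in zip(gate, x)) for gate in gate_weights]
    # Indices of the top_k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Mixing weights: softmax over the chosen experts' logits only.
    mix = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)
        out = [o + weight * yj for o, yj in zip(out, y)]
    return out


if __name__ == "__main__":
    calls: List[int] = []

    def make_expert(idx: int, scale: float) -> Expert:
        # Toy expert: records that it ran, then scales its input.
        def expert(v: Vector) -> Vector:
            calls.append(idx)
            return [scale * vi for vi in v]
        return expert

    experts = [make_expert(i, float(i + 1)) for i in range(4)]
    gates = [[2.0, 0.0], [1.0, 0.0], [0.0, 0.0], [-1.0, 0.0]]
    print(moe_forward([1.0, 0.0], gates, experts, top_k=2))
    print("experts evaluated:", calls)  # only 2 of the 4 experts ran
```

Because unselected experts are never called, compute per token scales with k rather than with the total number of experts, which is why an MoE model's total parameter count can be much larger than the parameters it activates per token.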

-

Alibaba's Tongyi Qianwen open-sources the Qwen1.5-MoE-A2.7B model

The Tongyi Qianwen team has launched Qwen1.5-MoE-A2.7B, the first MoE model in the Qwen series. The model has only 2.7 billion activated parameters, yet its performance is comparable to current state-of-the-art 7-billion-parameter models. Compared with Qwen1.5-7B, Qwen1.5-MoE-A2.7B has only 2 billion non-embedding parameters, about one-third of Qwen1.5-7B's. Its training cost is also 75% lower than Qwen1.5-7B's, and its inference speed is increased… - 5.6k

-

Mini AI model TinyLlama released: high performance, only 637MB

After much anticipation, the TinyLlama project has released a remarkable open-source model. The project began last September, with developers aiming to train a small model on trillions of tokens. After hard work and some setbacks, the TinyLlama team has now released the model. It has 1 billion parameters and was trained for about three epochs, that is, three passes through the training data. The final version of TinyLlama outperforms existing open-source language models of similar size, including Pythia-1.4B…
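The headline's 637MB figure can be sanity-checked with simple arithmetic: raw weight size is roughly parameters × bits per parameter ÷ 8. The article does not say how the 637MB file was produced, so the Python sketch below assumes the stated 1 billion parameters and decimal megabytes and ignores file metadata. On those assumptions, 16- or 32-bit weights would be several times larger, and 637MB works out to roughly 5 bits per parameter, which suggests the figure refers to a quantized version of the model.

```python
def weight_file_bytes(num_params: float, bits_per_param: float) -> float:
    """Approximate raw weight size in bytes, ignoring metadata and mixed precision."""
    return num_params * bits_per_param / 8


def implied_bits_per_param(file_bytes: float, num_params: float) -> float:
    """Average bits per parameter implied by a given file size."""
    return file_bytes * 8 / num_params


if __name__ == "__main__":
    params = 1e9  # "1 billion parameters", as stated in the article
    for bits in (32, 16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_file_bytes(params, bits) / 1e6:,.0f} MB")
    # 637 MB is assumed to mean 637 * 10^6 bytes.
    print(f"637 MB implies ~{implied_bits_per_param(637e6, params):.1f} bits per parameter")
```

Real quantized files often keep some tensors at higher precision, so the implied figure is an average across the file rather than the exact storage format.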
