Have you run into this when editing videos? A long video always contains plenty of good footage, but it is scattered all over the place.

You have to scrub along the progress bar to find each moment, note the timestamps, and then cut them out by hand in editing software, which takes time and effort.

Now that AI can watch and understand video, could it pick out the key footage for me automatically?

The logic of the whole tool is not complex, and the process is roughly three steps:

Upload the video file and let the AI analyze its content to find the clips worth cutting; then I select the parts I want in the interface; finally, the backend cuts and exports them automatically.

Once I actually started building it, though, there were more details than I expected and quite a few pitfalls. Here's how I built this application.

The hardest part of implementing the tool is getting the AI to "watch" the video, pick out the different time segments, and explain why it chose them. That depends critically on the underlying capability of the vision model.

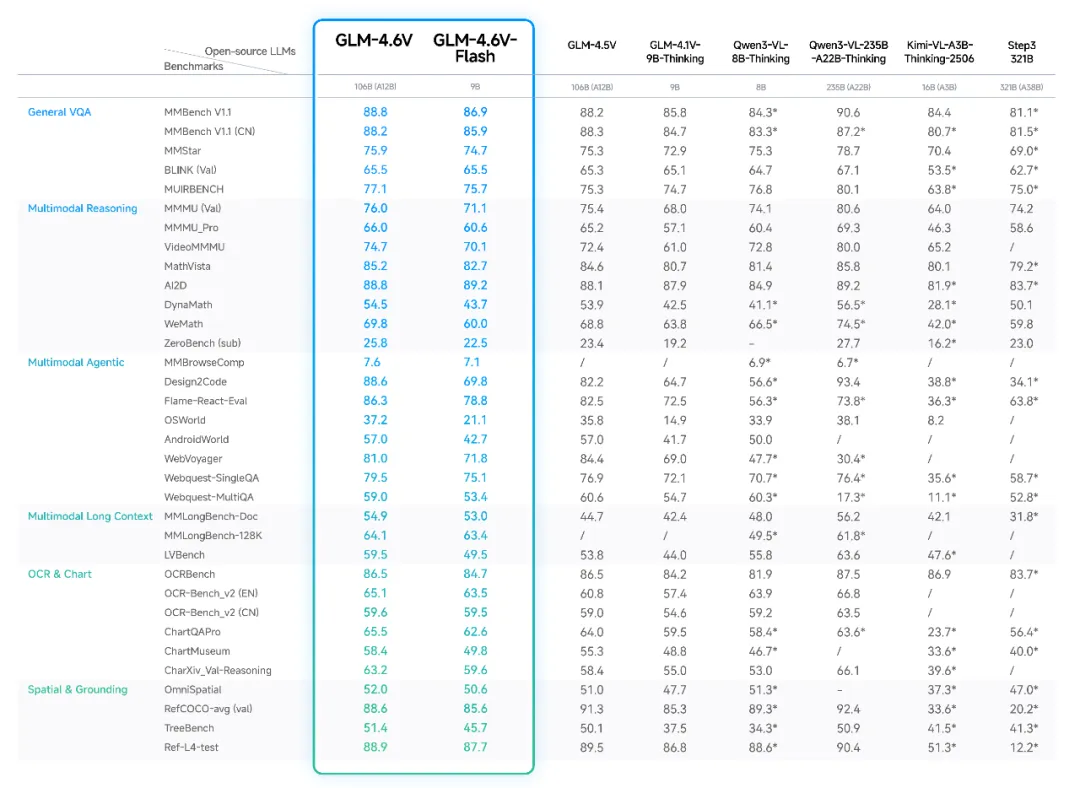

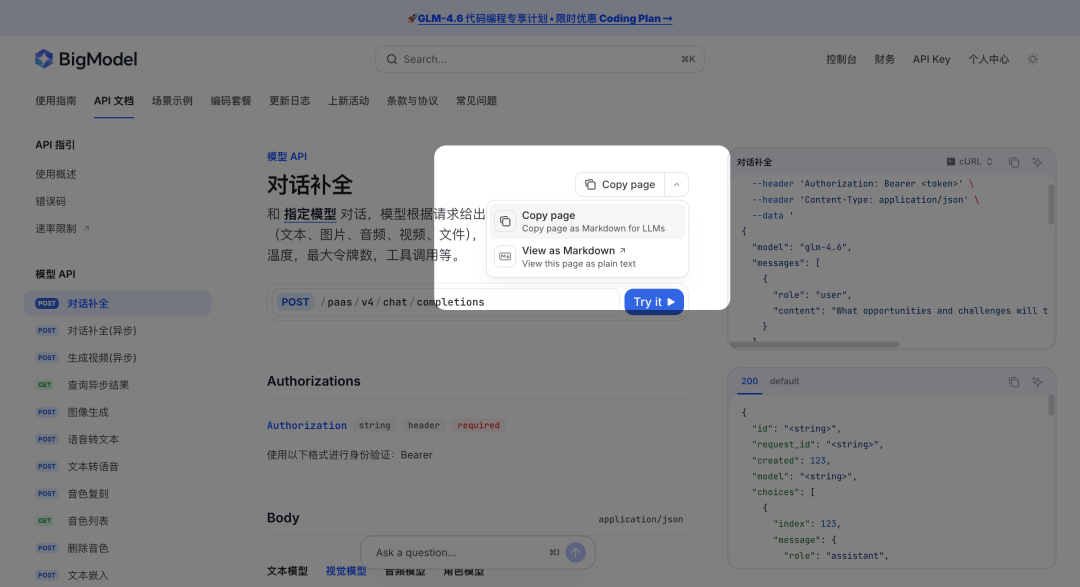

For this I used Zhipu's latest open-source vision model, GLM-4.6V. According to its published figures, GLM-4.6V performs well on 30+ evaluations including MMBench and MathVista, has a 128k context window (approximately 150 pages of documents or 1 hour of video), and supports long-video understanding and multi-document analysis.

An earlier demo video showed it taking a topic or a paper as direct input, automatically retrieving images, checking their quality, and generating illustrated explainer or research content. That made me curious, so I chose it for this job.

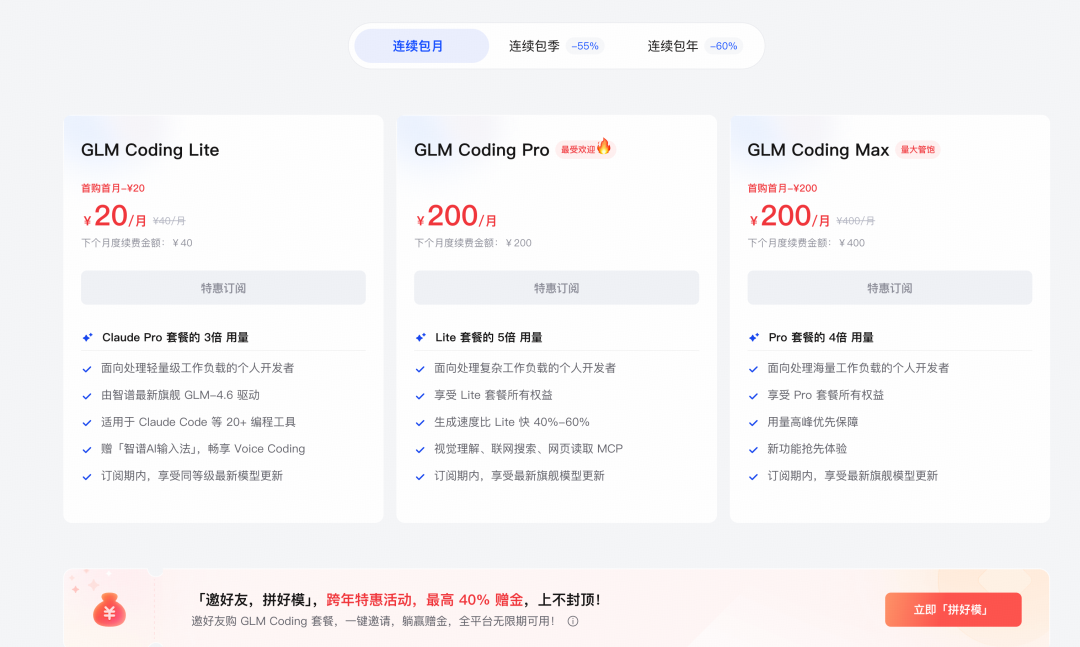

The GLM Coding Plan I bought earlier at a low price happens to come with GLM-4.6V, which makes it the best partner for this tool.

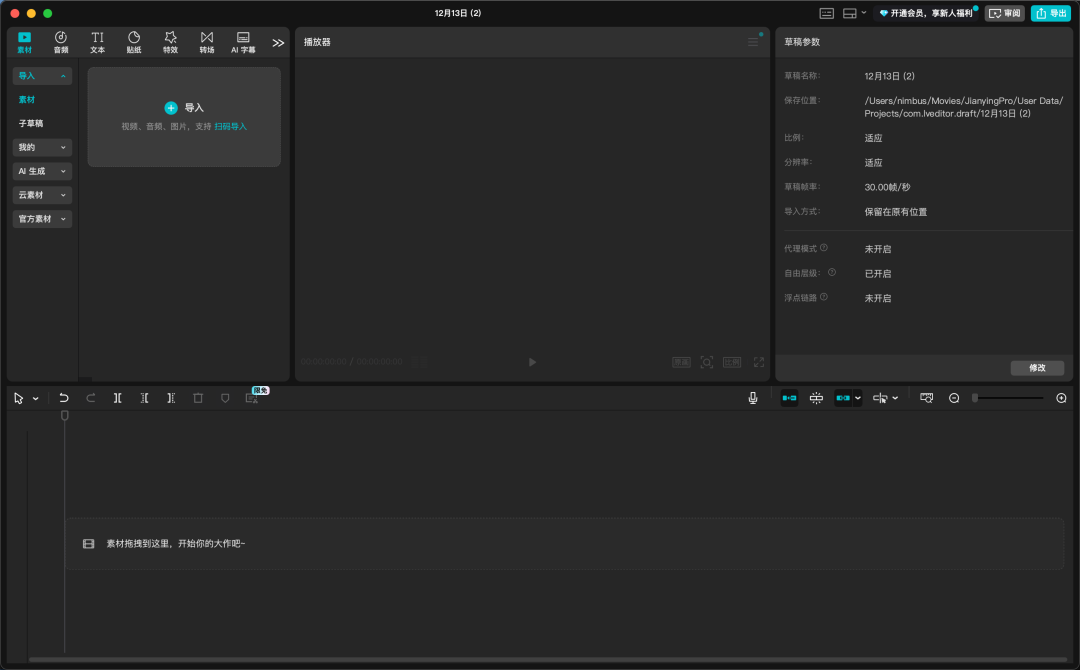

I won't walk through configuring GLM-4.6 in Claude Code, Cursor, or Cline step by step here. If you're interested, the official documentation is tutorial-level detailed: https://docs.bigmodel.cn/cn/coding-plan/overview

Now that everything is ready, let's talk about how to build it.

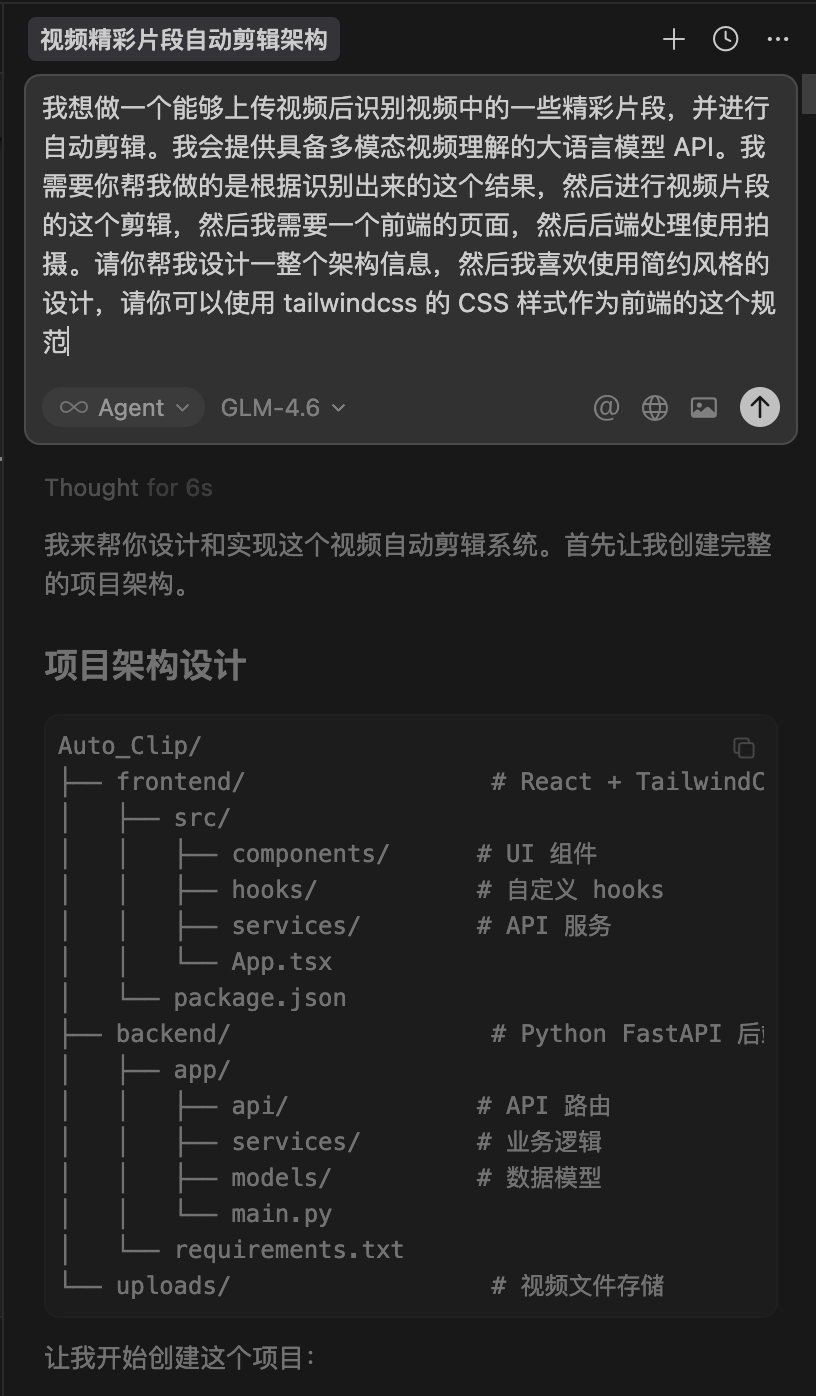

You can start by stating your requirement directly, even a vague one, and then describe the overall implementation logic. I entered mine by voice, and even though it was somewhat muddled, Zhipu's GLM-4.6 still understood what I was asking for and helped me design the architecture:

- I want to build a tool where I can upload a video, have it recognize the good footage, and clip it automatically. I will provide a large language model API with multimodal video understanding. On top of that recognition I need you to implement the clipping of the video segments, and I also need a front-end page plus back-end processing of the footage. Please help me design the overall architecture. I like a minimalist design, so use Tailwind CSS for the front-end styling.

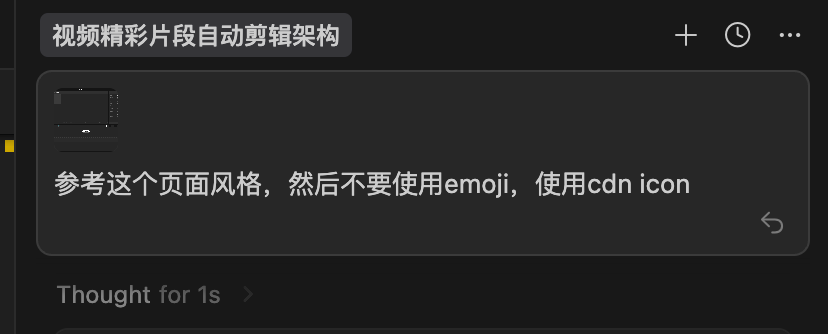

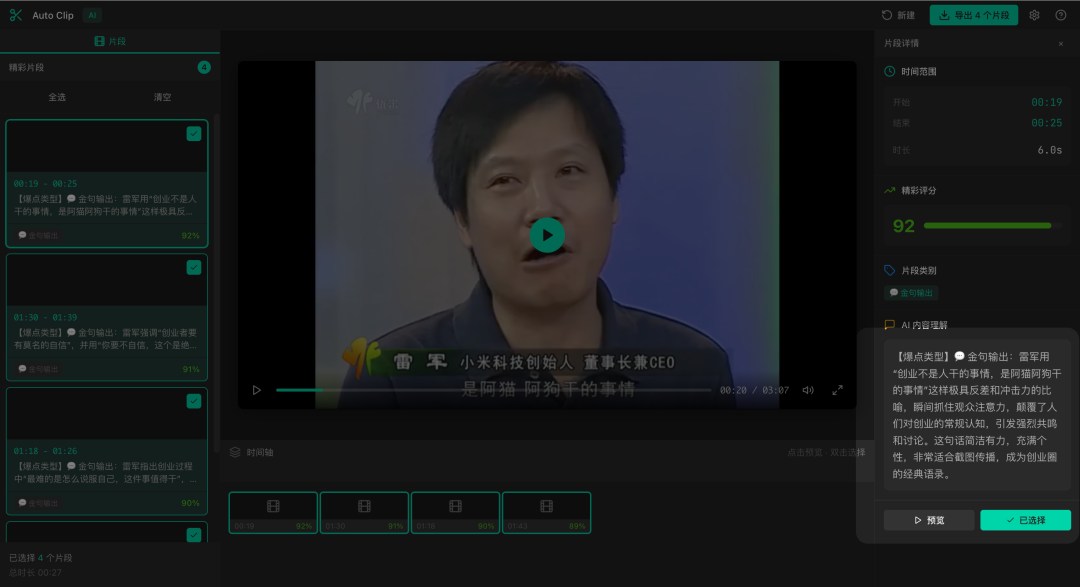

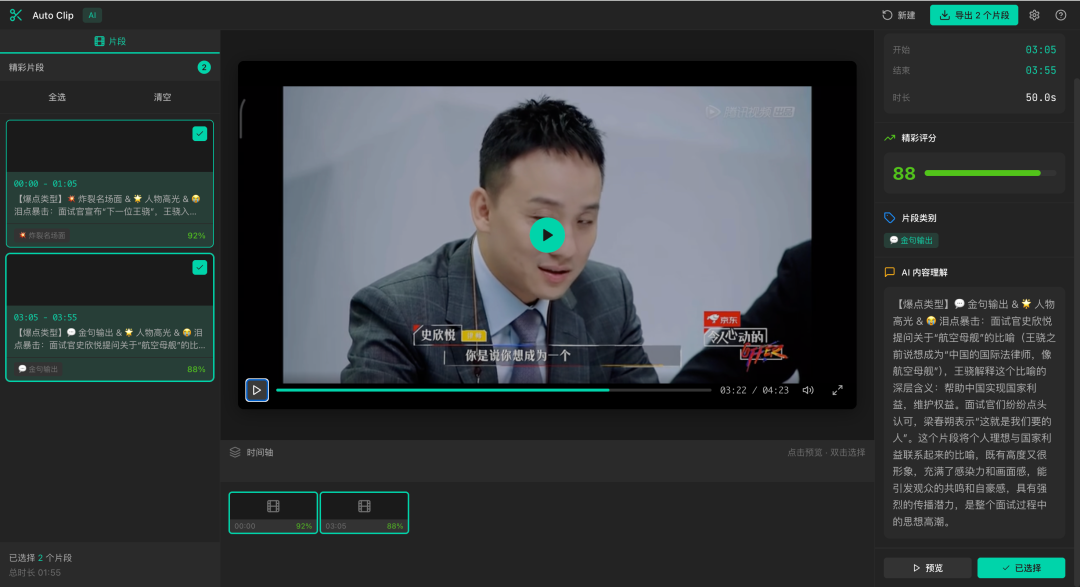

The front end uses Tailwind CSS, with a layout modeled on Jianying (CapCut): the clip list on the left, the video preview area in the middle, and the AI's analysis of each clip on the right. Simple and direct.

Because the Coding Plan we chose comes with tool access backed by the GLM-4.6V model, whenever I want to adjust the UI I just paste in a screenshot.

The picture I pasted in was simply this one, plus a short description, and it understood the layout I wanted well.

For me, a dark theme is easier on the eyes.

The backend uses Python, with FFmpeg doing the actual cutting underneath. The two other back-end services we need are the visual analysis API (GLM-4.6V) and a service that turns the uploaded video into a publicly accessible OSS address. For both, we simply give GLM-4.6 the relevant API documentation.
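As a rough illustration of the cutting step, here is a minimal sketch of how a Python backend might call FFmpeg to extract one clip. The function names `build_cut_command` and `cut_clip` are hypothetical, and the choice to re-encode with `libx264`/`aac` is my assumption, not something the original project confirms.

```python
import shutil
import subprocess
from pathlib import Path


def build_cut_command(src: str, start: float, end: float, dst: str) -> list:
    """Build an ffmpeg command that cuts the span [start, end] (seconds) from src."""
    if end <= start:
        raise ValueError("end must be greater than start")
    return [
        "ffmpeg",
        "-y",                         # overwrite dst if it already exists
        "-ss", f"{start:.3f}",        # seek to the clip start (input option)
        "-i", src,
        "-t", f"{end - start:.3f}",   # clip duration in seconds
        "-c:v", "libx264",            # re-encode video (assumed choice)
        "-c:a", "aac",                # re-encode audio (assumed choice)
        dst,
    ]


def cut_clip(src: str, start: float, end: float, dst: str) -> Path:
    """Run ffmpeg to write the clip; raises if ffmpeg is missing or fails."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(build_cut_command(src, start, end, dst), check=True)
    return Path(dst)
```

Keeping the command construction separate from the `subprocess` call makes it easy to check the arguments without running FFmpeg. Stream copy (`-c copy`) would be faster, but it can only cut at keyframes, so the clip boundaries may drift from the requested timestamps.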

You can go straight to the Zhipu website and paste the GLM-4.6V documentation directly into the chat window. Again, simple and direct.

Reference documentation: https://docs.bigmodel.cn/api-reference/

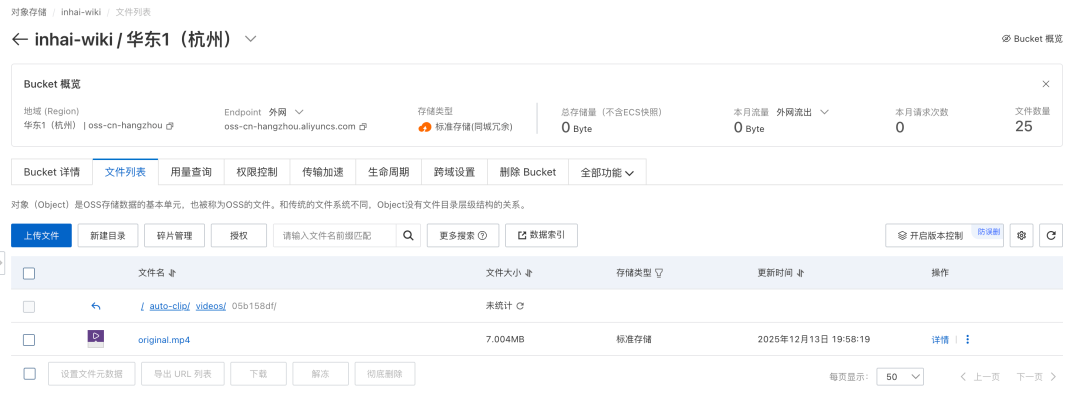

During testing we found that because our video is a local file, we need an address that is reachable on the public internet before GLM-4.6V can access it.
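To make the public-URL requirement concrete, here is a minimal sketch of a request body that references the video by URL. The endpoint, the model id `glm-4.6v`, and the `video_url` content part are assumptions based on the chat-completions style of the BigModel API, so check them against the official API reference. `build_analysis_request` is a hypothetical helper.

```python
# Assumed endpoint and model id; verify both against the official API reference.
API_URL = "https://open.bigmodel.cn/api/paas/v4/chat/completions"
MODEL = "glm-4.6v"


def build_analysis_request(video_url: str, prompt: str, model: str = MODEL) -> dict:
    """Build a chat-completions style body that points the model at a video URL."""
    if not video_url.startswith(("http://", "https://")):
        # A local path such as /tmp/demo.mp4 is not reachable by a hosted model.
        raise ValueError("video_url must be a publicly reachable http(s) URL")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # Assumed content-part shape for video input.
                    {"type": "video_url", "video_url": {"url": video_url}},
                    {"type": "text", "text": prompt},
                ],
            }
        ],
    }
```

The body would then be sent as JSON to `API_URL` with the API key in the request headers. The sketch leaves that part out so it stays independent of any particular HTTP client.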

The final flow: the user uploads a video → the backend automatically uploads it to OSS → gets a publicly accessible address → sends it to GLM-4.6V for analysis → returns the result for rendering.

So I used Alibaba Cloud's OSS object storage, and the video link it returns is what gets passed to GLM-4.6V.
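The flow described above can be sketched as a small orchestration function. This is only an illustration under assumptions: `analyze_upload` is a hypothetical name, and the storage and model steps are passed in as callables so the sketch does not depend on any real OSS or GLM-4.6V client.

```python
from typing import Callable


def analyze_upload(
    local_path: str,
    upload_to_storage: Callable[[str], str],
    analyze_video: Callable[[str], list],
) -> dict:
    """Run upload -> public URL -> model analysis and package the result."""
    public_url = upload_to_storage(local_path)   # e.g. an object-storage upload
    if not public_url.startswith(("http://", "https://")):
        raise ValueError("storage step must return a public http(s) URL")
    highlights = analyze_video(public_url)       # e.g. a call to the vision model
    # Sort by start time so the front end can render clips in timeline order.
    ordered = sorted(highlights, key=lambda h: h["start_time"])
    return {"video_url": public_url, "highlights": ordered}


if __name__ == "__main__":
    # Stub steps stand in for the real storage and model calls.
    def fake_upload(path: str) -> str:
        return "https://example.com/videos/" + path.split("/")[-1]

    def fake_analyze(url: str) -> list:
        return [
            {"start_time": 42.0, "end_time": 55.0, "score": 0.81},
            {"start_time": 10.5, "end_time": 25.0, "score": 0.92},
        ]

    print(analyze_upload("/tmp/demo.mp4", fake_upload, fake_analyze))
```

Injecting the two steps keeps the flow testable with stubs, and swapping in a real uploader or model client later does not change the orchestration.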

As for how to connect to it and obtain the required keys, I simply asked GLM-4.6. The integration details for the product are in its training data, so all we need to do is keep asking throughout the coding process.

You can even paste error messages straight to GLM-4.6 as you go, and it will walk you through setting up the correct configuration very carefully.

Throughout the process, what took the most time was not the code but the prompt, which I'm sharing here for reference.

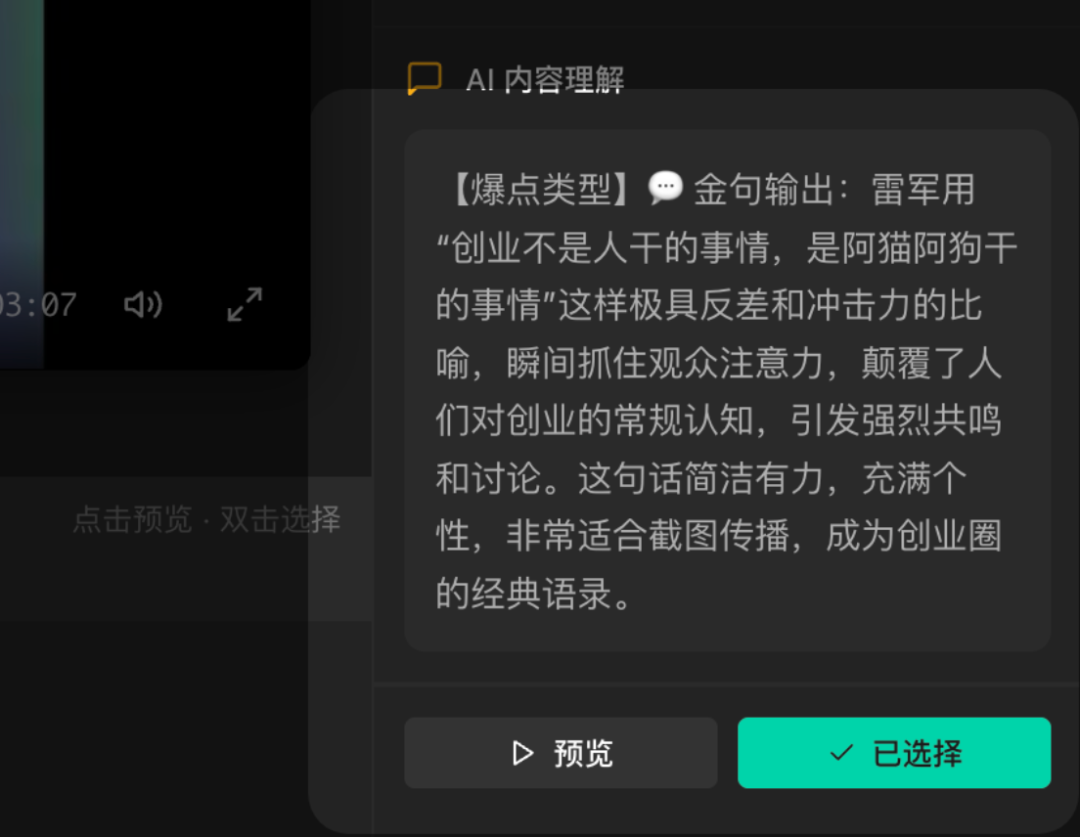

You are a top-tier short-video operator and viral content planner. Your mission is to pinpoint highlight footage in the video that has "explosive potential": something that makes the audience stop, react strongly, and share.

## 🔥 Core screening criteria: what is an "explosive point"?

### 💥 First priority: emotional bombs

Identify clips that trigger a strong emotional reaction:

- **Surprise / unexpected**: unforeseen reversals, god-tier plays, unexpected results
- **Laughter**: funny mistakes, brilliant comebacks, absurd behavior, iconic scenes
- **Moved / tears**: genuine emotion, warm moments, tear-jerking scenes
- **Anger / controversy**: viewpoints that trigger discussion, outrageous behavior, plenty to roast
- **Stunning / shock**: visual impact, high-energy visuals, displays of skill

### 🎯 Second priority: spreading hooks

Identify content with viral-spread characteristics:

- **Golden lines / catchphrases**: a single sentence that hits the mark, a memorable phrasing that spreads
- **Meme material**: exaggerated expressions, quirky actions, reaction shots
- **Imitable material**: fun footage suited to remakes and derivative works
- **Topic detonators**: elements likely to trigger discussion in the comments
- **Suspense / hooks**: makes viewers want to see what comes next

### ⚡ Third priority: high-energy rhythm

Identify high-density, fast-paced clips:

- **High-energy alert**: dense, continuous output of laughs / explosive points
- **Beat change**: sudden acceleration, abrupt shift in tone, strong contrast
- **Climax moment**: peak of the story, showdown, reveal of the answer
- **BGM sync point**: the music and the visuals line up perfectly

### 🎬 Fourth priority: content essence

Distill the video's core value:

- **Practical takeaways**: the most valuable knowledge points / skills
- **Product highlights**: the most attractive features / selling points
- **Highlight moments**: decisive moments that show charisma / strength
- **Story core**: the key footage without which the whole video cannot be understood

## 📊 Explosive point scoring criteria

| Score range | Level | Standard |
|-------------|-------|----------|
| 0.9-1.0 | S | Absolute explosive point: irresistible to share, a must-cut |
| 0.8-0.89 | A | High-energy moment: a visible emotional peak or memorable point |
| 0.7-0.79 | B | Highlight clip |
| 0.6-0.69 | C | General content: has value but lacks shareability |
| < 0.6 | D | Transitional content: not recommended for cutting |

**Note: only output clips scoring 0.7 or above. Do not output anything below this score!**

## 📝 Output format

```json
{
  "highlights": [
    {
      "start_time": 10.5,
      "end_time": 25.0,
      "description": "[explosive point type] Detailed description: (1) what happened (2) why it is an explosive point (emotional point / spread point) (3) suitable usage (opening hook / climax / ending / standalone clip)",
      "score": 0.92,
      "category": "category label"
    }
  ]
}
```

## 🏷️ Category labels (must choose from the following):

| Category | Applicable scene |
|----------|------------------|
| 💬 … | … |
| High-energy alert | High-intensity output |
| … | The most valuable core content |
| Highlight moment | … |
| Controversy | … |
| … | Very powerful visuals |

## ⚠️ Important requirements

1. **Quality over quantity**: select only real explosive points; do not pad the count. A 10-minute video may have only 2 to 5 real explosive points.
2. **Precise cut points**: the timestamps must capture the complete emotional / content arc; do not cut it off midway.
3. **Detailed description**: the description must make clear "why this is an explosive point" rather than simply describing the picture.
4. **Moderate duration**: 8-30 seconds is recommended for a short-video explosive point, 60 seconds at most.
5. **Avoid flat content**: skip transitions, setup, everyday dialogue, and the like.

Please watch the video carefully and screen it with "explosive point" as the core criterion. Return only the result in JSON format, with no other text.
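On the backend, the model's reply still has to be parsed and checked against the prompt's own rules. The following is a minimal sketch assuming the reply follows the JSON format requested in the prompt. `parse_highlights` is a hypothetical helper, and the regex-based extraction is my own defensive choice for replies that arrive wrapped in a Markdown fence.

```python
import json
import re

MIN_SCORE = 0.7       # score threshold stated in the prompt
MAX_DURATION = 60.0   # maximum clip length (seconds) stated in the prompt


def parse_highlights(reply: str) -> list:
    """Extract the JSON object from a model reply and keep only usable clips."""
    # Grab everything from the first "{" to the last "}" so that surrounding
    # fences or stray text do not break json.loads.
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    data = json.loads(match.group(0))

    kept = []
    for item in data.get("highlights", []):
        start = float(item["start_time"])
        end = float(item["end_time"])
        if float(item["score"]) < MIN_SCORE:
            continue  # the prompt forbids these, but verify anyway
        if not 0 <= start < end or end - start > MAX_DURATION:
            continue  # drop malformed or over-long segments
        kept.append(item)
    # Highest-scoring clips first, for display in the clip list.
    return sorted(kept, key=lambda h: h["score"], reverse=True)
```

Re-checking the score and duration in code means the tool does not rely on the model following every instruction in the prompt.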

At the beginning I asked the AI to "find the good clips," and the analysis it returned was far too broad, full of empty judgments like "scientific" or "rich" that were of no value.

I then changed the wording: instead of "wonderful", I asked for "explosive points", the kind of footage that makes people stop and share.

I also added a number of specific criteria to the prompt, such as whether there is an emotional outburst, a golden line, a reversal, or footage suited to opening a short video, and I specifically stressed that clips scoring below 0.7 should not be returned.

With this adjustment, the AI's judgments became noticeably more reliable.

Not only does it tell me which clips are worth cutting, it also gives its reasons: a change in rhythm, an emotional climax, or a particularly catchy line. All of this makes the selection process much better grounded.

I'm quite happy with this tool.

For a video of several minutes, once it is uploaded and analyzed by the AI, all the clips worth cutting are marked within a few minutes and the finished product follows a few minutes later. It's much more efficient than before. As for the final clips, my rule is quality over quantity: only high-quality output.

If you're building something similar, or want to learn how to integrate AI into your own tools, this project might give you some ideas.

And if you haven't yet found the right programming tools and base models, you can go straight to the Coding Plan and be done. You get a complete solution out of the box, and it is very good value.

In addition, if you run short of GLM-4.6V analysis quota, you can go to the special offers zone and buy the $5.9 GLM-4.6V discount package. Its 10 million tokens are more than enough for everyday use.

AI's capabilities keep growing, but it remains a tool. The key is to think clearly about your own needs before deciding which step to hand over to it.

For me, the most time-consuming part of this kind of editing is not the "cutting" but the "finding".

AI helped me automate the most mechanical, time-consuming part, and I still make the final decision.

I think that is probably the right way to really use AI.

That's all for this post. I'm Silver. See you next time.