On January 21, StepFun announced the open-source release of its multimodal model Step3-VL-10B. According to the announcement, with only 10B parameters, Step3-VL-10B reaches SOTA for its scale on a range of benchmarks covering visual perception, logical reasoning, competition mathematics, and general dialogue.

1AI reproduces the official introduction below:

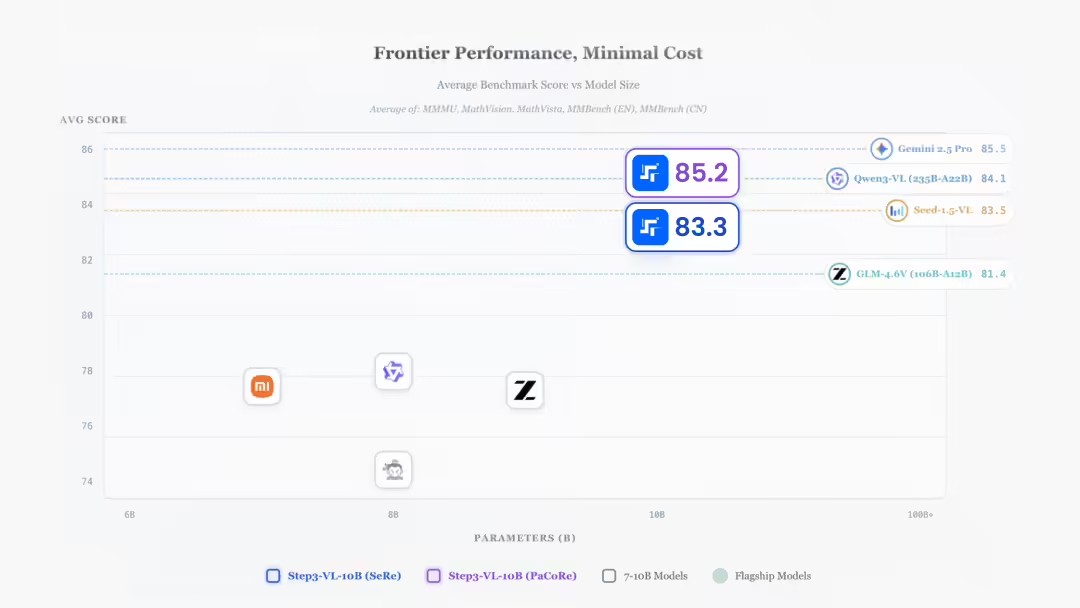

Surpassing models 20 times its size: StepFun's multimodal model "Step3-VL-10B" goes open source

With only 10B parameters, Step3-VL-10B reaches SOTA for its scale on a range of benchmarks covering visual perception, logical reasoning, competition mathematics, and general dialogue, addressing the industry dilemma that a small parameter count and a high level of intelligence are hard to achieve together.

In a number of key evaluations, we observed that Step3-VL-10B rivals or even surpasses open-source models 10 to 20 times its size (e.g. GLM-4.6V 106B-A12B, Qwen3-VL-Thinking 235B-A22B) as well as top closed-source flagship models (e.g. Gemini 2.5 Pro, Seed-1.5-VL).

With such a small yet powerful base model, complex multimodal reasoning (e.g. GUI operation, complex document parsing, high-precision counting) can be brought down to mobile phones, computers, and even industrial embedded devices.

Both the Base and Thinking models are open source, and you are welcome to download and try them:

- Project home page: https://stepfun-ai.github.io/Step3-VL-10B/

- Paper: https://arxiv.org/abs/2601.09668

- HuggingFace: https://huggingface.co/collections/stepfun-ai/step3-vl-10b

- ModelScope: https://modelscope.cn/collections/stepfun-ai/step3-VL-10B

10B parameters, 200B-class performance

Step3-VL-10B has three core highlights:

- Top-tier visual perception: Shows the strongest recognition accuracy and perceptual precision at its parameter scale. By introducing the PaCoRe (parallel coordinated reasoning) mechanism, the model achieves a qualitative leap in reliability on difficult tasks such as complex, high-precision OCR and spatial topology understanding.

- Deep logic and long-range reasoning: Thanks to reinforcement learning (RL), Step3-VL-10B achieves a step change in cross-task reasoning at the 10B scale. Whether it faces a competition-level math problem, a real programming environment, or a visual logic puzzle, the model can work through a multi-step chain of thought to reach the final answer.

- Powerful on-device agent interaction: Built on massive GUI-specific pre-training data, the model can accurately recognize and operate complex interfaces, making it a core engine for on-device agents.

Step3-VL-10B offers two reasoning paradigms, SeRe (sequential reasoning) and PaCoRe (parallel coordinated reasoning). Both achieve excellent scores across the core dimensions of STEM reasoning, recognition, OCR & documents, GUI grounding, spatial understanding, and code, with the PaCoRe paradigm performing better still.

1. STEM / multimodal reasoning

STEM (Science, Technology, Engineering, Mathematics) and multimodal reasoning are core dimensions for measuring a model's "deep intelligence".

Step3-VL-10B surpasses the GLM-4.6V and Qwen3-VL models on MMMU and MathVision.

2. Competition mathematics

Step3-VL-10B is particularly strong in mathematics. On competition problems such as AIME 25/24, it reaches the world's top tier with near-perfect scores.

This means that Step3-VL-10B has reasoning ability comparable to that of top human math contestants, and is even better at logic than many models with hundreds of billions of parameters.

3. 2D/3D spatial reasoning

Step3-VL-10B excels on multiple spatial reasoning benchmarks. On tests that demand precision and combine complex logic, such as BLINK, CVBench, OmniSpatial, and ViewSpatial, its performance significantly exceeds that of models of the same size.

4. Code

In real, dynamic programming environments, Step3-VL-10B surpasses many world-class multimodal models.

Real-world cases

In real usage scenarios, Step3-VL-10B's multimodal reasoning capability covers GUI perception, visual recognition, and reasoning.

- Case I: Morse code reasoning

- Case II: GUI perception

- Case III: Logic

Why? Three key designs

The performance above stems from three distinctive design choices in Step3-VL-10B:

1. Full-parameter, end-to-end multimodal joint pre-training: Abandons the traditional paradigm of staged training with frozen modules and instead performs full-parameter joint training directly on 1.2T of high-quality multimodal data. This approach deeply aligns visual features with language logic in the underlying semantic space, giving the model strong perceptual ability and the building blocks for complex cross-modal reasoning.

2. Large-scale multimodal reinforcement learning (RL) evolution: Takes the lead in bringing large-scale reinforcement learning to the multimodal domain, with more than 1,400 optimization iterations. The model achieves qualitative leaps in visual recognition, mathematical and logical reasoning, and general dialogue, and experimental data indicate that its performance is still rising and has not yet reached saturation.

3. Parallel coordinated reasoning mechanism (PaCoRe): Introduces the PaCoRe mechanism to support dynamic scaling of compute at inference time. By exploring multiple perceptual hypotheses in parallel and aggregating multidimensional evidence, the mechanism significantly improves the model's accuracy in competition mathematics, complex OCR, precise object counting, and spatial topology reasoning.
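The general idea behind exploring several hypotheses in parallel and then aggregating them can be illustrated with a very small sketch. This is not StepFun's PaCoRe implementation, whose details are not given here: it substitutes plain majority voting for the evidence aggregation step, and the names `aggregate_parallel` and `toy_sampler` are hypothetical stand-ins for a real model call.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def aggregate_parallel(sample: Callable[[int], str], n_paths: int) -> str:
    """Run n_paths independent attempts in parallel and return the most common answer.

    Majority voting is an assumed, simplified aggregation rule, not the
    mechanism described in the announcement.
    """
    with ThreadPoolExecutor(max_workers=n_paths) as pool:
        # pool.map preserves input order, so the result is deterministic.
        answers: List[str] = list(pool.map(sample, range(n_paths)))
    # Ties are resolved in favor of the answer that appeared first.
    return Counter(answers).most_common(1)[0][0]


def toy_sampler(seed: int) -> str:
    """Hypothetical stand-in for one model rollout: answers "9" on every third attempt."""
    return "7" if seed % 3 else "9"


if __name__ == "__main__":
    # Six attempts yield four "7" answers and two "9" answers.
    print(aggregate_parallel(toy_sampler, n_paths=6))
```

Raising `n_paths` spends more compute at inference time in exchange for a more stable answer, which is the trade-off the mechanism above is described as exploiting.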

Thanks to this "three-in-one" design, Step3-VL-10B demonstrates that the level of intelligence does not depend entirely on parameter count.

With higher-quality, more targeted data construction and a systematic post-training and reinforcement learning strategy, 10B-class models can also compete with models 10 to 20 times their size on multiple benchmarks, or even overtake them.

This also means that world-class multimodal capability can be obtained at lower cost and with less compute. At the same time, intelligence that was previously dominated by very large cloud models can move down to the device side.

Step3-VL-10B (including the Base and Thinking models) is now open source. You are welcome to discuss and share with us, and the open-source community is welcome to fine-tune the models and work with us to drive a leap in small-model intelligence.