On February 11, Ant Group open-sourced Ming-Flash-Omni 2.0, its omni-modal large model. In a number of public benchmarks, the model performs strongly on key capabilities such as vision-language understanding, controllable speech generation, and image generation and editing.

According to the announcement, Ming-Flash-Omni 2.0 is the industry's first unified audio generation model that can generate speech, ambient sound, and music together in the same track. Users can precisely control timbre, speaking rate, intonation, volume, emotion, and dialect using only natural-language instructions. At inference time the model runs at a very low frame rate of 3.1 Hz, enabling real-time, high-fidelity generation of minute-long audio and placing it at an industry-leading level in inference efficiency and cost control.

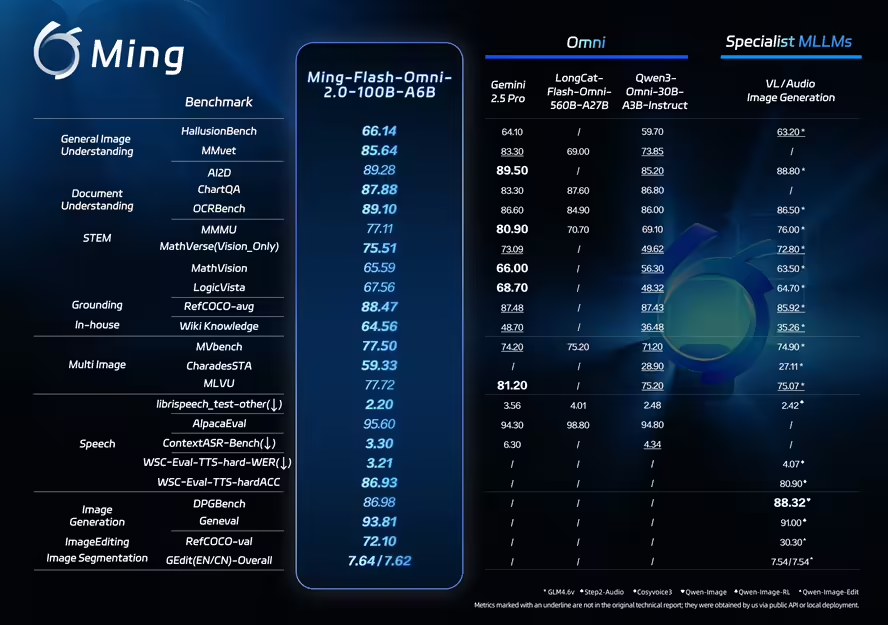

It is widely accepted in the industry that large multimodal models will eventually converge on more unified architectures that allow deeper synergy across modalities and tasks. In practice, however, omni-modal models find it hard to be both general and strong: open-source omni-modal models often trail specialized models on specific individual capabilities. Ant Group has invested in the omni-modal direction for several years, and the Ming-Omni series evolved against this backdrop. Early versions built a unified multimodal capability foundation, mid-stage versions validated the capability gains that come from scaling, and the latest version, 2.0, raises omni-modal understanding and generation to leading open-source levels and surpasses top specialized models in several areas.

Open-sourcing Ming-Flash-Omni 2.0 means its core capabilities are released as a “reusable foundation,” providing a unified capability entry point for end-to-end multimodal application development.

According to 1AI, Ming-Flash-Omni 2.0 is trained on the Ling-2.0 architecture (MoE, 100B-A6B, i.e. about 100B total parameters with about 6B activated) and is optimized end to end around three goals: seeing better, hearing better, and generating more stably. On the vision side, incorporating hundreds of millions of fine-grained data samples and hard-example training strategies has significantly improved recognition of complex objects, such as closely related animal and plant species, craftsmanship details, and rare cultural relics. On the audio side, the model unifies the generation of speech, sound effects, and music, supports precise natural-language control over timbre, speaking rate, emotion, and more, and offers zero-shot voice cloning and customization. On the image side, it improves the stability of complex edits, supporting functions such as photo adjustments, scene replacement, pose optimization, and one-click retouching, while preserving image consistency and detail in dynamic scenes.

According to Zhou Joon, the key to omni-modal technology is deeply integrating multimodal capabilities through a unified architecture and deploying them efficiently. With the open-source release, developers can reuse the same set of vision, speech, and generation capabilities, significantly reducing the complexity and cost of chaining multiple models. Going forward, the team will continue to improve temporal video understanding, complex image editing, and real-time long-form audio generation, refine the toolchain and evaluation system, and push omni-modal technology toward large-scale deployment in real-world business.

The model weights and inference code for Ming-Flash-Omni 2.0 are now available in open-source communities such as Hugging Face. Users can also try the model and call it online via Ling Studio, Ant Group's official platform.