DeepSeek, China's homegrown large AI model

Why is DeepSeek so popular? It has topped the App Store free-app charts in several countries.

First: free and good

On a computer, you can use DeepSeek directly on its official website; on a phone, you can download the app from the major app stores. Both are free of charge.

- Official website: https://chat.deepseek.com/

- App: search for "DeepSeek" in the app marketplace

How it performs in use

It is very simple to use, with no need for complicated prompting tricks (remember to turn on the [Deep Thinking] mode). For example, I asked it to write a statement refusing to work overtime, in the voice of Lu Xun.

It first thinks the request through in depth and shows the whole thinking process: from analyzing what the user needs, to how to write the piece, to a final checking step. I have to say the thinking really is deep. This editor could not have come up with it in 23 days, and it took only 23 seconds!

Finally, look at the output it produced after thinking.

I have to say, this statement is absolutely brilliant! It compares overtime work to "demolishing and selling souls" and speaks of a "working hours book cut by yellow table paper", which accurately hits workers' pain points. It uses old allusions to scold the new world, and every sentence is a hard truth we want to say but dare not. The last line, "people have to stand and walk", is simply legendary. It could be called the classical-prose mouthpiece of today's overworked office workers.

How can we use reasoning models better?

The article "o1 isn't a chat model (and that's the point)" mentions two tips:

Original: https://www.latent.space/p/o1-skill-issue

1. Provide rich background information

Treat the reasoning model as you would a friend to whom you are describing a difficult problem: give it all the relevant details, such as the background of the problem and the approaches you have already tried.

Example:

Suppose you want to re-plan the small garden at your home. You could say: "My small garden is about 20 square meters and irregularly shaped. There are some flowers planted in it at the moment, but the layout is a bit messy. I previously tried planting in zones by color, but the result was not good and still looked cluttered. I would like a small garden that has character and looks good, and where I can also grow some vegetables. I prefer a natural, fresh style, and my budget is limited."

2. Focus on the objective and specify the output

Just tell the reasoning model what result you ultimately want, and don't worry about exactly how it gets there.

Example:

"I need a re-planning proposal for my small garden, covering plant layout, the division of the vegetable-growing area, and the overall style design. It should reflect a natural, fresh style and keep costs low."
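The two tips can be combined into a single prompt. The following is a minimal sketch and not part of the original article: the helper name `build_prompt` and its parameters are hypothetical, and it only assembles text, leaving the model call out.

```python
def build_prompt(background: str, attempts: str, goal: str, constraints: list[str]) -> str:
    """Assemble a prompt: rich background first, then the desired result."""
    lines = [
        "Background:",
        background.strip(),
        "",
        "What I have already tried:",
        attempts.strip(),
        "",
        "What I want as the result:",
        goal.strip(),
    ]
    if constraints:
        lines.append("")
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in constraints)
    return "\n".join(lines)


prompt = build_prompt(
    background="My small garden is about 20 square meters and irregularly shaped.",
    attempts="I tried planting in zones by color, but it still looked cluttered.",
    goal="A re-planning proposal covering plant layout, the vegetable area, and overall style.",
    constraints=["natural, fresh style", "low cost"],
)
print(prompt)
```

Note that the prompt states what the result should contain and leaves the method to the model, in line with the second tip.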

Second: open source technology

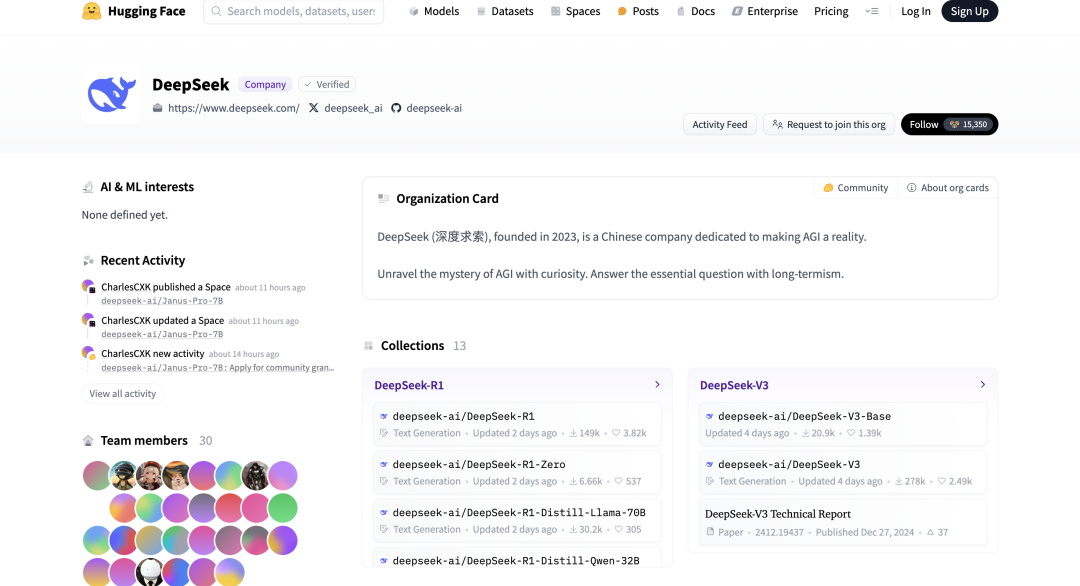

DeepSeek's open-source models are released under a single, standardized license, the permissive MIT License: fully open source, with no restrictions on commercial use and no application required.

- Open-source repository: https://github.com/deepseek-ai/DeepSeek-LLM

- HuggingFace Link: https://huggingface.co/deepseek-ai

Technical strength

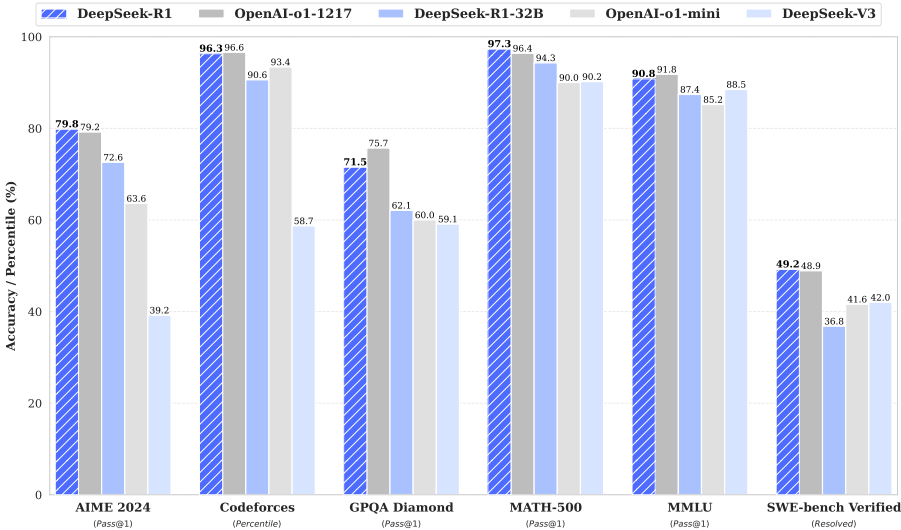

DeepSeek-R1 uses reinforcement learning at large scale in the post-training phase, which greatly improves the model's reasoning ability with only a very small amount of labeled data. Its performance on tasks such as math, code, and natural-language reasoning is comparable to that of the official release of OpenAI o1.

Paper: https://github.com/deepseek-ai/DeepSeek-R1/blob/main/DeepSeek_R1.pdf

DeepSeek-R1 further improves reasoning performance by introducing "cold-start data" and "multi-stage training", reaching a level comparable to OpenAI-o1-1217. In addition, its reasoning ability is successfully transferred to smaller dense models through distillation, which significantly improves the reasoning performance of those models.

- Cold-start data: DeepSeek-R1 is fine-tuned on a small amount of high-quality "cold-start" data before large-scale reinforcement learning begins. This data helps the model reach a better state quickly, reducing the number of iterations and the time required to train from scratch.

- Multi-stage training: DeepSeek-R1 employs a multi-stage training process. First, reinforcement learning is used to strengthen the model's reasoning; then, as training approaches convergence, further fine-tuning is performed with supervised data. This approach is intended to optimize the model's performance across different tasks.

- Distillation: Distillation is a technique for transferring knowledge from a large model to a smaller one. DeepSeek-R1 acts as the teacher model and generates a large number of samples. These samples are used to fine-tune the smaller models so that they inherit the reasoning capabilities of the larger model. This approach saves computational resources because the small models do not need large-scale reinforcement learning.
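As a rough illustration of the distillation step only, the sketch below shows how teacher outputs could be turned into supervised fine-tuning records for a smaller model. Everything in it is an assumption made for illustration: `fake_teacher` stands in for a real call to the large model, and the `prompt`/`completion` record layout is hypothetical, not the format DeepSeek actually used.

```python
import json
from typing import Callable


def fake_teacher(prompt: str) -> dict:
    """Stand-in for the large teacher model (hypothetical, returns canned text)."""
    return {
        "reasoning": f"Think step by step about: {prompt}",
        "answer": "42",
    }


def build_sft_records(prompts: list[str], teacher: Callable[[str], dict]) -> list[dict]:
    """Turn teacher outputs into prompt/completion pairs for fine-tuning a student."""
    records = []
    for prompt in prompts:
        out = teacher(prompt)
        # The completion keeps the reasoning as well as the answer, so the
        # student is trained to reproduce the teacher's reasoning behavior.
        completion = f"{out['reasoning']}\n\nFinal answer: {out['answer']}"
        records.append({"prompt": prompt, "completion": completion})
    return records


records = build_sft_records(["What is 6 * 7?"], fake_teacher)
for record in records:
    print(json.dumps(record, ensure_ascii=False))
```

The student model would then be fine-tuned on such records with ordinary supervised learning, which is why no reinforcement learning is needed on the small model.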

For comparable performance, the DeepSeek-R1 API is priced dozens of times lower than the OpenAI o1 API, which makes it the king of price/performance!

If you want to build it into your own business, you can use the API.

How do I access the DeepSeek-R1 API?

- Go to the DeepSeek official website: https://www.deepseek.com/

- Register and log in to your account to access the console: https://platform.deepseek.com/usage

- Select the API menu, create an API key, and copy and save it.

- API documentation: https://api-docs.deepseek.com/zh-cn/guides/reasoning_model

Before producing its final answer, the reasoning model outputs a chain of thought, which improves the accuracy of the final answer.

In each round of the conversation, the model outputs the chain-of-thought content (reasoning_content) and the final answer (content). In the next round, the chain-of-thought content from previous rounds is not concatenated into the context, as shown in the following figure:

Before using deepseek-reasoner, upgrade the OpenAI SDK so that it supports the new parameters.

pip3 install -U openai

The following Python code shows how to access the chain of thought and the final answer, and how to concatenate the context across multiple rounds of conversation.

    from openai import OpenAI

    # Fill in your own DeepSeek API key
    client = OpenAI(api_key="", base_url="https://api.deepseek.com")

    # Round 1
    messages = [{"role": "user", "content": "9.11 or 9.8, which is bigger?"}]
    response = client.chat.completions.create(
        model="deepseek-reasoner",
        messages=messages
    )

    # Chain of thought and final answer
    reasoning_content = response.choices[0].message.reasoning_content
    content = response.choices[0].message.content
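The snippet above covers only the first round. A minimal sketch of the second round follows, assuming a `client` created as in round 1. The helper names `next_round_messages` and `ask` are hypothetical, and the answer and follow-up question in the demonstration are placeholders. Only the final answer (`content`) is appended to the history; `reasoning_content` is deliberately left out, matching the behavior described earlier.

```python
def next_round_messages(messages: list[dict], content: str, user_text: str) -> list[dict]:
    """Return the history for the next round: the previous messages, the assistant's
    final answer (without reasoning_content), and the new user question."""
    return messages + [
        {"role": "assistant", "content": content},
        {"role": "user", "content": user_text},
    ]


def ask(client, messages: list[dict]) -> tuple[str, str]:
    """Send one round to deepseek-reasoner and return (reasoning_content, content).
    `client` is assumed to be an openai.OpenAI instance created as in round 1."""
    response = client.chat.completions.create(
        model="deepseek-reasoner",
        messages=messages,
    )
    message = response.choices[0].message
    return message.reasoning_content, message.content


# Offline demonstration of the history handling (no API call is made here).
round1 = [{"role": "user", "content": "9.11 or 9.8, which is bigger?"}]
round2 = next_round_messages(round1, "9.8 is bigger.", "And 9.9 or 9.11?")
print(round2)
```

With a real client, `reasoning, answer = ask(client, round2)` would run the second round.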

Summary

It's no coincidence that DeepSeek has exploded in popularity. Technological inclusion is never an empty phrase: it is a spirit of freedom engraved in every line of open-source code, and an unfading promise in the wave of technological equality.