A newcomer's first hands-on AI comic: the full production process, in detail and packed with practical tips

1. Choosing the topic

I find it interesting when short videos retell the story of a TV drama or novel character from that character's first-person point of view. A long time ago I watched "The Great Sword in the Snow", and there was a character in it I liked very much, so I decided to make a practice video about him.

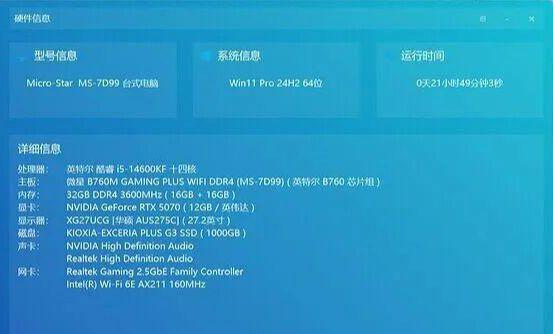

2. Hardware and tools

To save money, I tried to use locally deployed models wherever possible in the production process, which comes with some hardware requirements. I'm using a 12 GB graphics card, which is about the bare minimum.

This project used quite a few tools, so I'll just list them briefly:

- Script text: mainly ChatGPT 5.2 and Gemini 3 Pro.

- Image generation: mainly MidJourney, supplemented by Nano Banana Pro.

- Video generation: ComfyUI + Wan2.2 I2V 14B Q6_K, deployed locally to save money.

- Voiceover: locally deployed Index-tts.

- Editing: CapCut (Jianying).

- Frame interpolation and upscaling: I tried local ComfyUI (RIFE and SeedVR2), but it failed (details later), so in the end I did this in CapCut.

The overall process is roughly as follows:

Summarize the source material > finalize the voiceover script > define the characters' appearance (as text) > design the shots > generate the voiceover audio > generate character reference images > generate keyframes > generate video clips (shot description + keyframe) > edit > frame interpolation > upscaling
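The stages above can be sketched as an ordered list, which makes the dependencies explicit (for example, the voiceover exists before editing starts). This is only an illustrative sketch: the stage names paraphrase the article, and the tool mapping follows the tool list in section 2, with a generic "video editor" for the last three stages.

```python
# Illustrative model of the production pipeline; stage names paraphrase the article.
PIPELINE = [
    ("summarize source material", "ChatGPT (online search)"),
    ("finalize voiceover script", "ChatGPT + Gemini"),
    ("define character appearance (text)", "ChatGPT"),
    ("design shots (storyboard)", "ChatGPT"),
    ("generate voiceover audio", "Index-tts (local)"),
    ("generate character reference images", "MidJourney / Nano Banana Pro"),
    ("generate keyframes", "MidJourney / Nano Banana Pro"),
    ("generate video clips", "ComfyUI + Wan2.2 I2V (local)"),
    ("edit", "video editor"),
    ("frame interpolation", "video editor (local RIFE attempted)"),
    ("upscaling", "video editor (local SeedVR2 attempted)"),
]


def stage_index(name: str) -> int:
    """Return the position of a stage; raise ValueError if it is unknown."""
    for i, (stage, _tool) in enumerate(PIPELINE):
        if stage == name:
            return i
    raise ValueError(f"unknown stage: {name}")


def runs_before(a: str, b: str) -> bool:
    """True if stage a comes earlier in the pipeline than stage b."""
    return stage_index(a) < stage_index(b)


if __name__ == "__main__":
    for i, (stage, tool) in enumerate(PIPELINE, start=1):
        print(f"{i:2d}. {stage:<40} [{tool}]")
```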

The complete ChatGPT conversation is a bit long; contact me if you want it (my contact details are on my homepage).

3. From text to shots

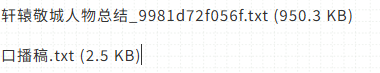

I watched it a long time ago and some details were hard to remember, so I had GPT search online for the relevant storyline and summarize it for me.

From that summary it wrote a voiceover script. I revised it based on my own memory, then had Gemini proofread and revise it, and ended up with the final text.

Next, in the same GPT conversation (so it kept the context), I had it design the looks of the story's four core characters: the protagonist, his wife, his daughter, and the ancestor. I had GPT write these as English Midjourney prompts.

I also had GPT produce a storyboard from the voiceover script, using this prompt:

You are now a professional video director + short-video scriptwriter + Midjourney visual prompt expert.

Based on the latest version of the text generated above, generate a storyboard that is **fully aligned with the text, sentence by sentence**.

[Required output]

Organize the final content as a storyboard, with one or more shots per sentence. It must be complete.

Each shot must contain the following fields, in this order:

1. **Shot number** (e.g., Scene 01, Scene 02...)

2. **Suggested duration** (seconds, estimated from speaking speed)

3. **Scene** (interior/exterior, location, environmental features)

4. **Character(s)**

5. **Shot size** (e.g., close-up, full shot, medium shot, long shot, high angle, low angle, POV)

6. **Visual style** (e.g., realistic, cinematic, warm tone, high-tech feel, hand-painted)

7. **Corresponding script text (voiceover)**

8. **Corresponding character lines** (if the shot needs dialogue; otherwise leave blank)

9. **Full storyboard description in Chinese** (must be clear enough for the team to execute directly)

10. **Midjourney English prompt (keyframe)**

Requirements:

– The style must be consistent

– Include lighting, lens, environment, characters, emotion, materials, composition, etc.

– Must be usable directly in MJ

– Suggested structure:

"Scene description + character + action + environment + lighting + camera + style + realism + data"

Notes:

– The storyboard must follow the text in order, omit nothing, and be fully aligned with the voiceover content.

– If a sentence is long, it can be split into multiple shots.

– Visuals must be professional, clear, and detailed enough to shoot directly.

– The Midjourney keyframe prompt must be in English and professional.
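To make the storyboard format concrete, here is a minimal sketch of one storyboard row with the ten requested fields, plus a helper that joins prompt parts in the suggested order. The field names, the helper, and the example values are hypothetical illustrations; treating the last slot ("data") as Midjourney parameters such as `--ar 16:9` is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    """One storyboard row holding the ten fields requested in the prompt."""
    shot_number: str            # e.g. "Scene 01"
    duration_s: float           # suggested duration, estimated from speaking speed
    scene: str                  # interior/exterior, location, environment
    characters: str
    shot_size: str              # e.g. close-up, medium shot, POV
    visual_style: str           # e.g. realistic, cinematic, warm tone
    script_text: str            # the voiceover sentence this shot covers
    character_lines: str = ""   # left empty when no one speaks
    description_zh: str = ""    # full storyboard description in Chinese
    mj_prompt: str = ""         # English Midjourney keyframe prompt


def build_mj_prompt(scene: str, character: str, action: str, environment: str,
                    lighting: str, camera: str, style: str, realism: str,
                    params: str = "") -> str:
    """Join prompt parts in the suggested order, skipping empty parts.

    `params` stands in for the final "data" slot and is appended as-is
    (assumed here to be Midjourney parameters such as "--ar 16:9").
    """
    parts = [scene, character, action, environment, lighting, camera, style, realism]
    text = ", ".join(p.strip() for p in parts if p.strip())
    return f"{text} {params.strip()}".strip()


# Hypothetical example values, for illustration only.
shot = Shot(
    shot_number="Scene 01",
    duration_s=4.0,
    scene="exterior, mountain pass at dusk",
    characters="protagonist",
    shot_size="medium shot",
    visual_style="cinematic, cold tone",
    script_text="(first sentence of the voiceover)",
)
shot.mj_prompt = build_mj_prompt(
    "a lone swordsman on a mountain pass",
    "middle-aged man in a dark cloak",
    "gripping the hilt of his sword",
    "falling snow and distant pines",
    "low golden dusk light",
    "medium shot, 35mm lens",
    "cinematic",
    "photorealistic",
    "--ar 16:9",
)
print(shot.mj_prompt)
```

Keeping each part as a separate argument makes it easy to hold the style and realism parts fixed across all shots, which is one way to meet the "style must be consistent" requirement.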

For the storyboard, I used a fairly detailed prompt that had GPT act as a "professional director + storyboard artist" and produce a sentence-by-sentence storyboard from the text. The table includes shot numbers, suggested durations, visual descriptions, shot sizes, and, most importantly, English prompts that can be pasted straight into MJ.

4. Character images and keyframes (hands-on)

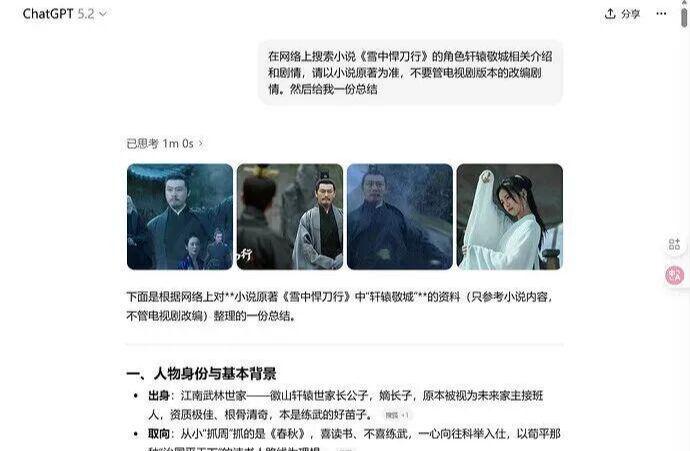

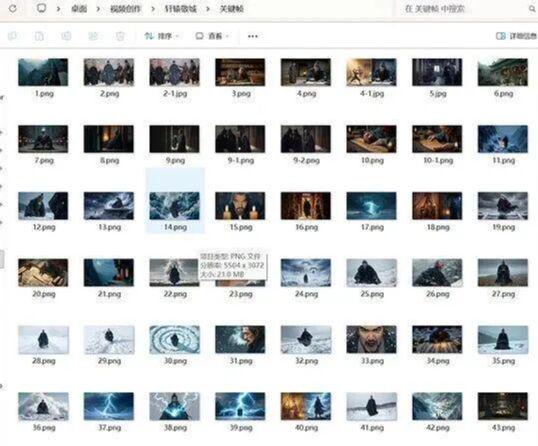

With the text ready, it was time to generate images. The main tools were MidJourney, working together with Nano Banana Pro.

I spent 70 bucks on MJ, and this one video burned through more than half of it. Painful. It also turned out that MJ isn't very good at images with multiple characters, so I found an API for Nano Banana Pro. Exporting a 4K image cost about two or three cents, which made it a good option for redrawing images or creating multi-character ones.

Two things to note:

- Character reference images should be upscaled to 4K: the higher their quality, the better the keyframes generated from them. The keyframes themselves don't need upscaling.

- MJ isn't great at multi-character images, so I used Nano Banana Pro a lot. Whenever I wasn't quite happy with an MJ image, I redid it with Nano Banana Pro.

Here are the results:

5. Video clips and audio

Video clips:

With keyframes and storyboard descriptions in hand, we can generate video. I also tried cloud services such as Kling, Hailuo, and Vidu, which actually give better results than the local model, but they're too expensive at about 5 cents per second of video. Since my own computer could handle it, I chose local deployment instead. I'm using the Wan2.2 I2V 14B Q6_K model, which just fits within my 12 GB of VRAM. Generation takes about 70 seconds per second of video, at 480p and 16fps. In the end I generated 46 shots of five seconds each.
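The numbers above are enough to estimate the local-versus-cloud trade-off. The sketch below uses only the article's figures (46 shots of 5 s, about 70 s of compute per second of video, about 5 cents per second of video in the cloud, currency as in the article); the function names are illustrative.

```python
def local_render_hours(num_shots: int, seconds_per_shot: float,
                       compute_s_per_video_s: float) -> float:
    """Wall-clock hours to render every shot once on the local GPU."""
    total_video_s = num_shots * seconds_per_shot
    return total_video_s * compute_s_per_video_s / 3600


def cloud_cost(num_shots: int, seconds_per_shot: float,
               cost_per_video_s: float) -> float:
    """Cost of rendering every shot once on a per-second-billed cloud service."""
    return num_shots * seconds_per_shot * cost_per_video_s


# Figures from this article: 46 shots x 5 s, ~70 s of compute per video second,
# and cloud pricing of about 5 cents per video second.
hours = local_render_hours(46, 5, 70)   # 230 s of video -> 16,100 s of compute
cents = cloud_cost(46, 5, 5)            # 230 s of video -> 1,150 cents
print(f"local: {hours:.1f} h, cloud: {cents:.0f} cents")
```

Both figures assume every shot is generated exactly once; re-rolling shots you're unhappy with multiplies both the local time and the cloud bill.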

Audio:

The audio is the voiceover reading of the script, generated with a locally deployed Index-tts model.

I found an online collection of 10,000 Chinese voices and picked one of them: https://www.ttslist.com/

To generate the voiceover, I fed the whole script in at once, which was quicker.

Here's a pitfall: it's better to prepare the voiceover script first and generate the audio in segments, so the speaking speed is easier to adjust during editing. Instead, I ended up with a single three-minute voice track.
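A minimal sketch of the segmenting step, assuming a Chinese script: split on sentence-ending punctuation, then merge short sentences until a length cap is reached, so each segment can be sent to the TTS model as a separate run. The 60-character default is an arbitrary choice, and how each segment is passed to Index-tts is not shown.

```python
import re

# Zero-width split point right after Chinese/English sentence-ending punctuation.
_SENTENCE_END = re.compile(r"(?<=[。！？!?；;])")


def split_script(text: str, max_chars: int = 60) -> list[str]:
    """Split a voiceover script into segments for separate TTS runs.

    Consecutive sentences are merged until adding the next one would exceed
    max_chars. A single sentence longer than max_chars is kept whole.
    Sentences are joined without spaces, which suits Chinese text.
    """
    sentences = [s.strip() for s in _SENTENCE_END.split(text) if s.strip()]
    segments: list[str] = []
    current = ""
    for s in sentences:
        if current and len(current) + len(s) > max_chars:
            segments.append(current)
            current = s
        else:
            current += s
    if current:
        segments.append(current)
    return segments


print(split_script("第一句。第二句！第三句？", max_chars=8))
```

Generating one audio file per segment also gives you per-sentence durations, which makes it easier to line shots up with the voiceover in the editor.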

6. Editing

Editing is actually very simple: match up the audio, the subtitles (auto-generated in the editor and then corrected), the video clips, and the background music. Because the shot durations in the AI-generated storyboard don't always line up with the voiceover, I added or removed shots as I edited.
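If the voiceover is generated per sentence, the mismatch between planned shot time and audio time can be checked before opening the editor. This is a hypothetical helper, not part of the author's workflow: it assumes you have the shot durations grouped by sentence and the measured audio length of each sentence, and the 0.5 s tolerance is arbitrary.

```python
def duration_gaps(shot_durations: list[list[float]],
                  audio_durations: list[float],
                  tolerance: float = 0.5) -> list[tuple[int, float]]:
    """Compare planned shot time with voiceover time, sentence by sentence.

    shot_durations[i] holds the durations (seconds) of the shots for sentence i;
    audio_durations[i] is the length (seconds) of that sentence's audio.
    Returns (sentence_index, gap) pairs where |gap| > tolerance.
    A positive gap means too much footage (trim or drop a shot);
    a negative gap means too little footage (add a shot).
    """
    if len(shot_durations) != len(audio_durations):
        raise ValueError("need one entry per sentence in both lists")
    gaps = []
    for i, (shots, audio) in enumerate(zip(shot_durations, audio_durations)):
        gap = sum(shots) - audio
        if abs(gap) > tolerance:
            gaps.append((i, round(gap, 2)))
    return gaps


# Three sentences: the first fits, the second has a shot too many, the third needs one more.
print(duration_gaps([[5], [5, 5], [5]], [4.8, 6.0, 9.0]))
```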

My source footage was 480p at 16fps, and I wanted to bring it up to 1080p at 30fps, without really knowing how.

I used locally deployed RIFE (frame interpolation) and SeedVR2 (upscaling) models.
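A quick calculation shows what this target asks of the models. The sketch uses the article's numbers (480p at 16fps to 1080p at 30fps, and the roughly 200-second video mentioned below); the helper is illustrative.

```python
def upscale_plan(src_height: int, src_fps: float, dst_height: int,
                 dst_fps: float, duration_s: float) -> dict:
    """Scale factor, frame-rate ratio, and frame counts for a conversion."""
    return {
        "scale": dst_height / src_height,                # linear resolution factor
        "fps_ratio": dst_fps / src_fps,                  # new frames per source frame
        "src_frames": round(duration_s * src_fps),       # frames before interpolation
        "dst_frames": round(duration_s * dst_fps),       # frames the upscaler must process
    }


plan = upscale_plan(480, 16, 1080, 30, 200)
print(plan)
```

Two things stand out: the upscaler has to process almost twice as many frames after interpolation as before it, and 30/16 is not a whole number, so if your interpolator only supports integer multipliers (for example 2x to 32fps), you still need a resampling step to reach 30fps.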

But I ran into two pitfalls:

- I edited first, planning to do frame interpolation and upscaling afterward, but the edited project's base frame rate was 24fps rather than my footage's 16fps, which made that nearly impossible.

- Without splitting the video, I threw all of it into SeedVR2: a 30fps video of about 200 seconds, over 6,000 frames. The model ran for two hours, the computer froze at almost 5,000 frames, and my heart broke.
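One way to avoid losing hours of progress is to upscale in fixed-size chunks of frames, so a crash costs only the current chunk. This is a hedged sketch of the chunk planning only, not something the article tried: the chunk size of 500 is arbitrary, and feeding each range to your ComfyUI workflow depends on whether its video loader lets you set a start frame and a frame cap.

```python
from typing import Iterator


def frame_chunks(total_frames: int, chunk_size: int) -> Iterator[tuple[int, int]]:
    """Yield half-open (start, end) frame ranges covering all frames."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, total_frames, chunk_size):
        yield start, min(start + chunk_size, total_frames)


chunks = list(frame_chunks(6000, 500))
print(len(chunks), chunks[0], chunks[-1])
```

Writing each finished chunk to disk before starting the next one also lets you resume from the last completed range instead of starting over.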

So in the end I used the editor's built-in frame interpolation and upscaling instead of the local models. Lesson learned: finish processing your footage before you start editing.

Final thoughts

That's my experience of the complete process. I took plenty of clumsy detours and leaned on local models to save money, but at least I've found the pitfalls. I've written it up to share with you.