-

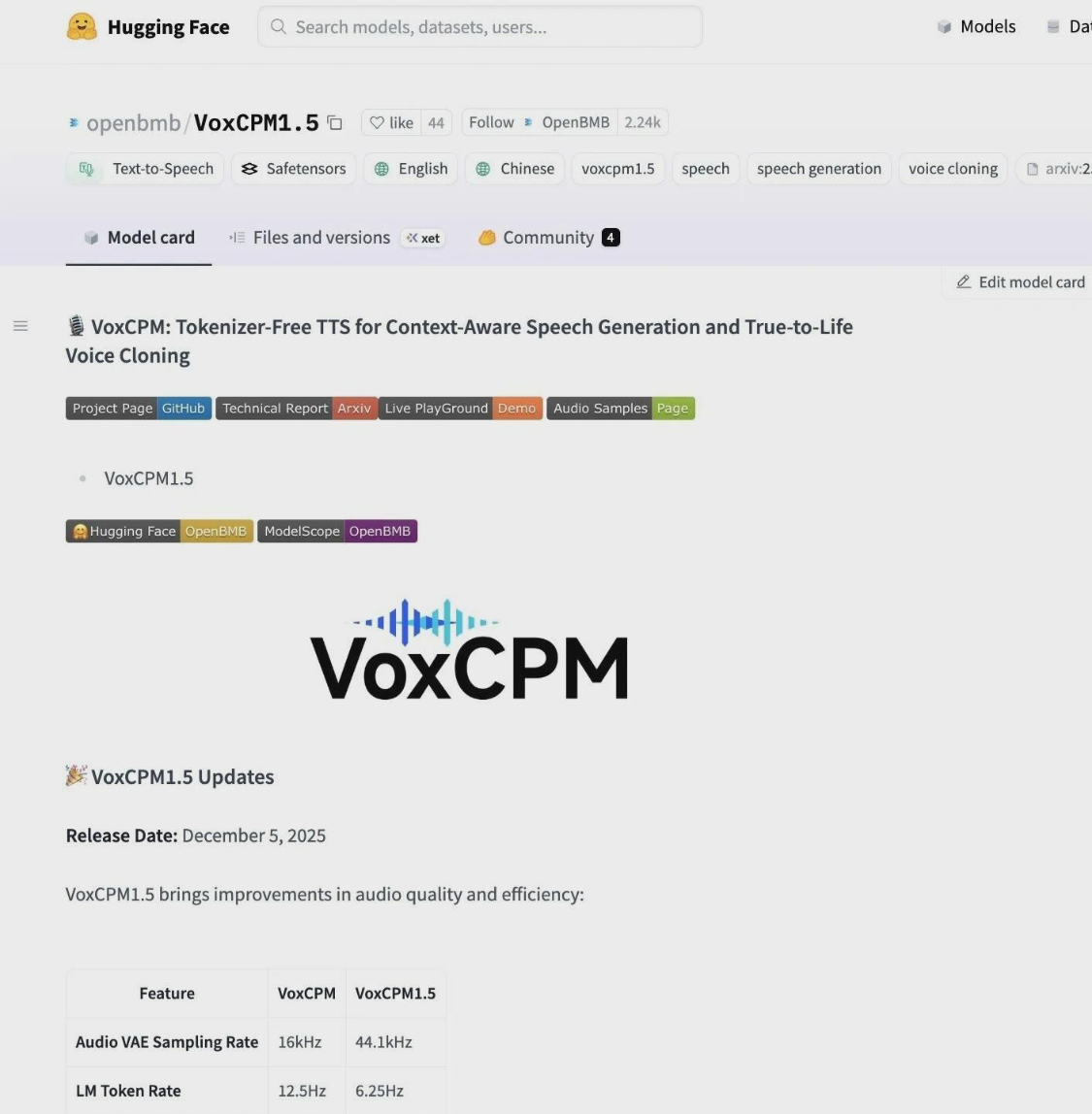

VoxCPM 1.5 speech generation model open-sourced: high-sampling-rate audio cloning, doubled efficiency

On December 11, ModelBest announced that VoxCPM 1.5 was officially online. While continuing to improve the developer experience, the release also brings a number of core capability upgrades. VoxCPM is a speech generation base model with 0.5B parameters, first released in September this year. 1AI lists the highlights of the VoxCPM 1.5 update: High-sampling-rate audio cloning: the AudioVAE sampling rate has been raised from 16kHz to 44.1kHz, so the model can produce better, more detailed sound based on high-quality audio; ..- 1.2k

-

AutoGLM: every phone becomes an "AI phone"

Yesterday, December 10, Zhipu AI announced that it had officially open-sourced the AutoGLM project, which aims to make the "AI agent phone" a shared foundation for the industry. First complete operation chain: On October 25, 2024, AutoGLM was regarded as the world's first AI agent with Phone Use capabilities, achieving a stable, complete operation chain on a real device. Cloud phones and security design: In 2025, AutoGLM 2.0 launched, using MobileRL, Comp..- 3.2k

-

Zhipu GLM-4.6V series multimodal large models released and open-sourced; API prices cut by 50%

On December 9, Zhipu AI announced and open-sourced the GLM-4.6V series of multimodal large models, including: GLM-4.6V (106B-A12B): the base version for cloud and high-performance cluster scenarios; GLM-4.6V-Flash (9B): a lightweight version for local deployment and low-latency applications. GLM-4.6V raises the training context window to 128k tokens and reaches SOTA visual understanding accuracy among models of the same parameter size..- 2.7k

-

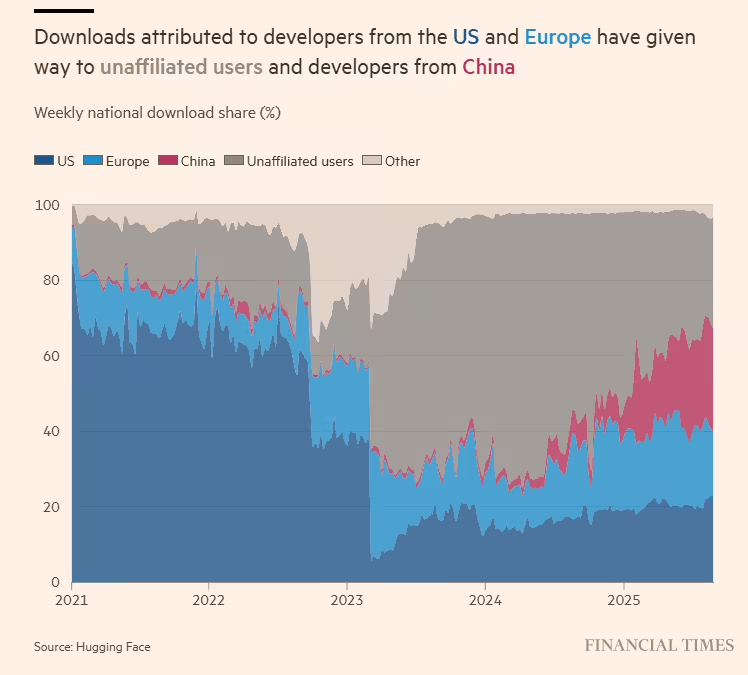

Research: over the past year, Chinese open-source AI model downloads surpassed those of the United States for the first time

On November 28, according to the Financial Times, China has surpassed the United States in the global market for open-source AI models, gaining a key advantage in the global application of this powerful technology. According to the report, a study by the Massachusetts Institute of Technology and the open-source AI startup Hugging Face found that, over the past year, the share of open-source AI models developed by Chinese teams in global open-source model downloads had risen to 17%, more than 15...- 1.9k

-

China's "openness" in the open-source AI model market stands in stark contrast to the "closedness" of OpenAI

On November 26, the Financial Times reported that China had surpassed the United States in the global "open-source" AI market, gaining a key advantage in the global application of this powerful technology. A study by MIT and the open-source AI startup Hugging Face found that, over the past year, China's share of total open-source model downloads for newly developed models had risen to 17%, surpassing the 15.8% share of US companies such as Google, Meta and OpenAI. This is the first time that Chinese companies have surpassed their American counterparts in this area. Open..- 2.8k

-

Zhipu chairman Liu Debing: full support for open source; we have open-sourced more than 40 AI models

On the morning of November 16, at today's 2025 AI+ Conference, Zhipu chairman Liu Debing said: "Zhipu has its own attachment to open source and favors openness and sharing. At the practical, strategic level, open source benefits the AI industry. AI needs thousands of people across all fields to participate, and foundation models need many people to study and play with them together." "We fully support open source and have now open-sourced more than 40 models," Liu Debing said. He also pointed out that Zhipu is considering how to explore commercial returns on an open-source basis through..- 1.6k

-

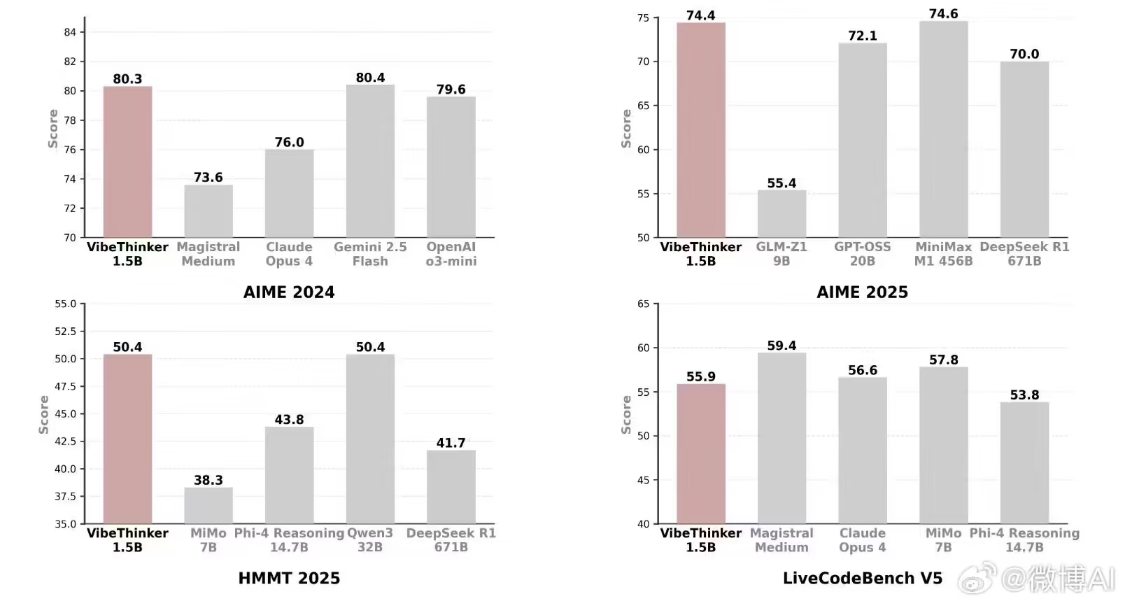

Sina Weibo releases its first open-source large model, VibeThinker-1.5B: a small model challenging giant-parameter rivals

On November 14, Sina Weibo released its first open-source large model, VibeThinker-1.5B, claiming that "small models can also be highly intelligent". 1AI attaches the official introduction as follows: Do only the industry's most powerful models, at 1T or even 2T parameters, have a high level of intelligence? Are only a handful of technology giants capable of building large models? VibeThinker-1.5B is Weibo AI's answer of "no" to these questions, showing that small models can also have a high IQ. It's..- 2.6k

-

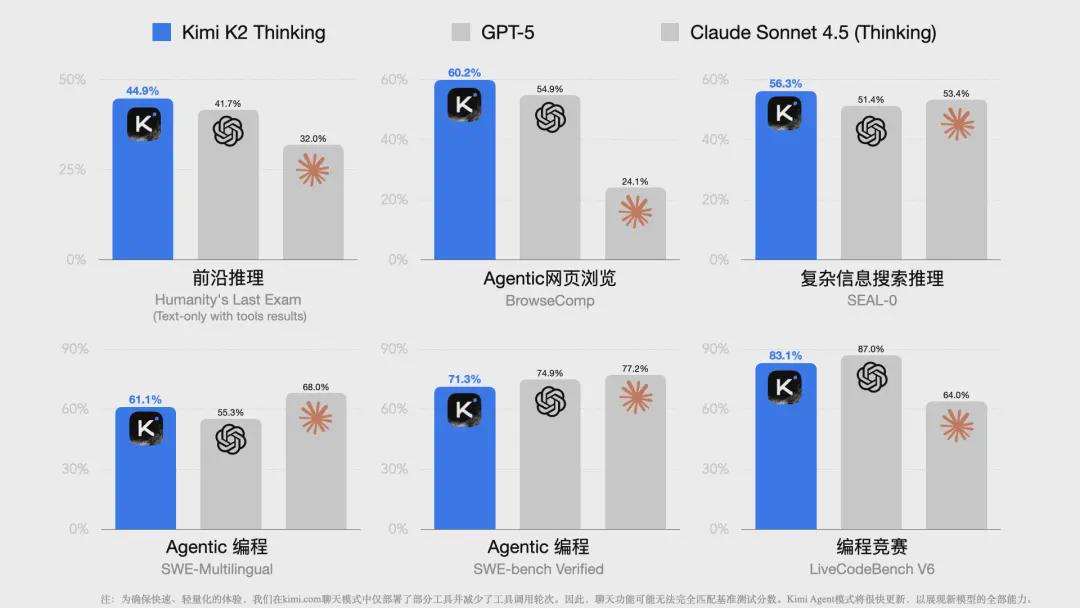

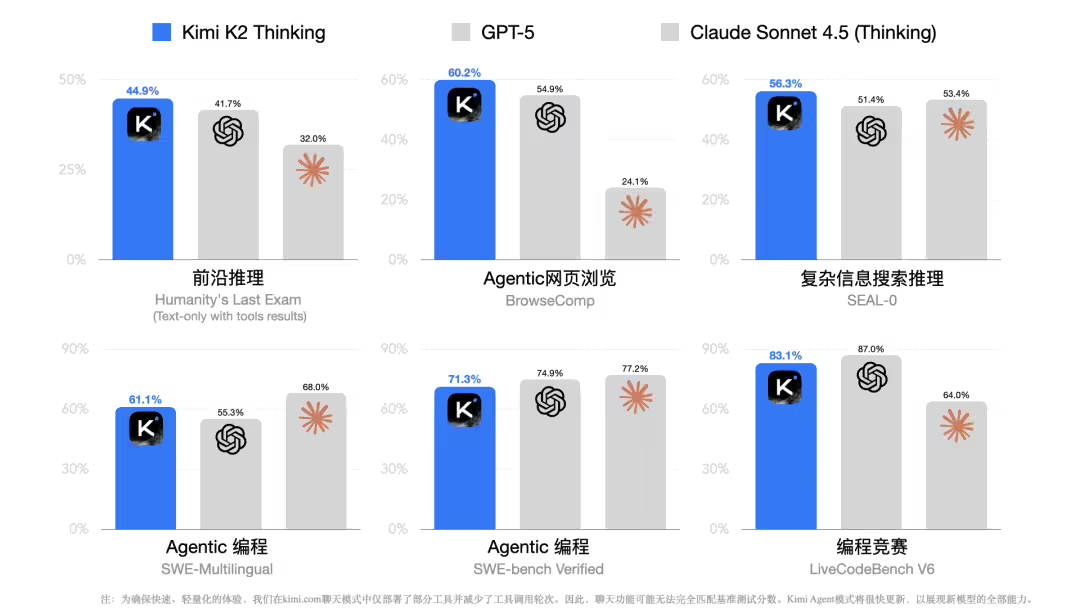

Moonshot AI's Kimi K2 Thinking training cost reported at $4.6 million, with performance exceeding OpenAI GPT models backed by billions of dollars of investment

On November 9, it was reported that Moonshot AI launched its most powerful open-source thinking model to date on Thursday. According to Moonshot AI, Kimi K2 Thinking scored 44.9% on Humanity's Last Exam (HLE), surpassing advanced models such as GPT-5, Grok-4 and Claude 4.5. However, CNBC cited informed sources as saying that Kimi K2 Thinki..- 2.4k

-

Moonshot AI releases Kimi K2 Thinking, Kimi's most powerful open-source thinking model to date

On November 7, Moonshot AI launched Kimi's most powerful open-source thinking model to date, Kimi K2 Thinking. The model is described as a new-generation Thinking Agent, trained under Moonshot AI's "model as agent" concept, that natively masters the ability to "think while using tools". On Humanity's Last Exam, autonomous web browsing (BrowseComp), complex information gathering and reasoning (SEAL-0)- 2.8k

-

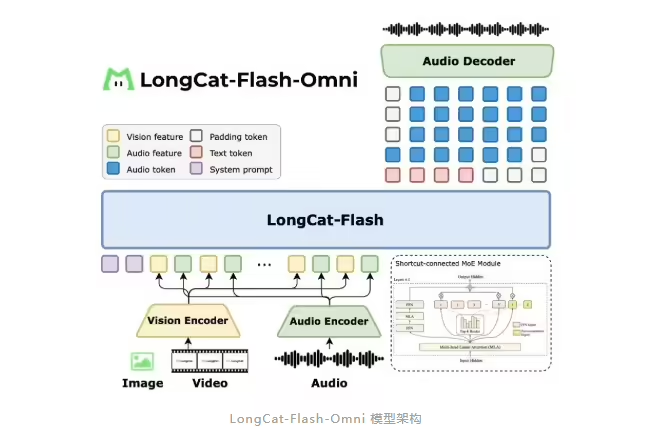

Meituan releases and open-sources the LongCat-Flash-Omni model: real-time video interaction at SOTA level

On November 3, it was reported that Meituan officially released the LongCat-Flash series on September 1; it is now available in two open-source versions, LongCat-Flash-Chat and LongCat-Flash-Thinking, and has attracted developers' attention. Today, the LongCat-Flash series officially adds a new family member, LongCat-Flash-Omni. 1AI learned from the official introduction that LongCat-Flash-Omni uses LongCat..- 2.2k

-

A hands-on guide to deploying a popular video model and easily generating video from text

Alibaba's Tongyi Wanxiang 2.1 (Wan2.1) is currently a leading open-source video model and, thanks to its strong generation capability, has become an important tool for AI video creation. This article shows how to quickly install and use the Wan2.1 model to generate video from text. 1. Environment preparation. Before starting, make sure your device meets the following requirements. Supported systems: Windows, macOS. Hardware requirements: a device with an NVIDIA graphics card is recommended, with video memory ≥ 16 GB; an RTX 3060 or above is recommended to ensure that the model..- 3.3k

-

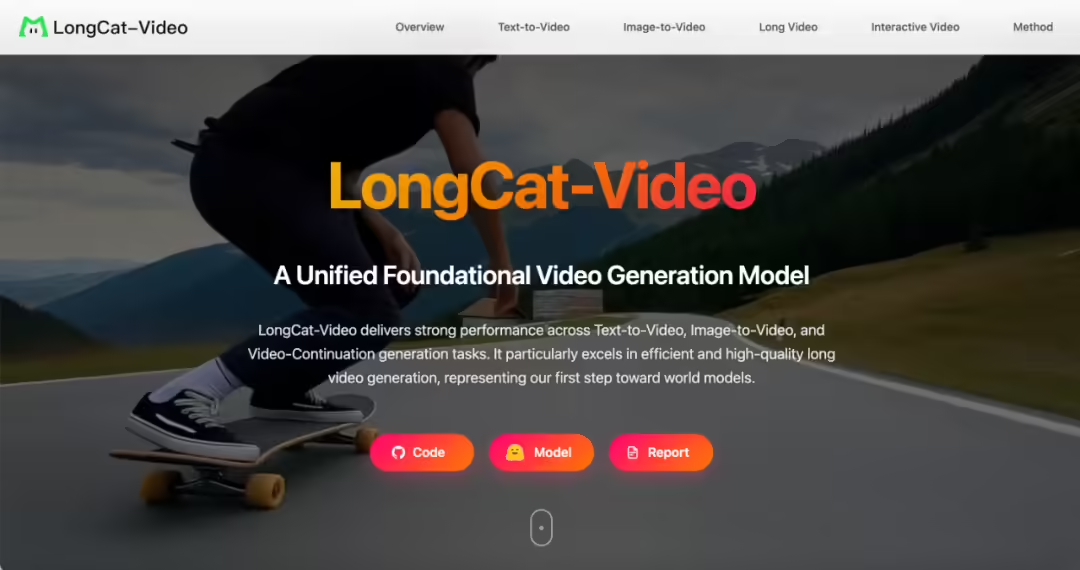

Meituan releases and open-sources the LongCat-Video video generation model, which stably generates 5-minute content

On the morning of October 27, the LongCat team released and open-sourced the LongCat-Video video generation model. According to the official introduction, it achieves open-source SOTA (state of the art) on the basic text-to-video and image-to-video tasks with a single unified model, and, being natively pre-trained on the video continuation task, it generates coherent minute-long videos, maintains temporal consistency across frames and physically plausible motion, and has a significant advantage in long video generation. According to the introduction, in recent years the World Model has allowed artificial intelligence to truly understand..- 2.1k

-

Hunyuan World Model 1.1 released and open-sourced: deployable on a single card, creating a 3D world in seconds

On October 23, Tencent Hunyuan announced that version 1.1 of the Hunyuan World Model (WorldMirror) was officially released and open-sourced, adding support for multi-view and video input, so that it can be deployed on a single card and create a 3D world in seconds. In July this year, Tencent launched Hunyuan World Model 1.0, described as the industry's first open-source, explorable world generation model compatible with traditional CG pipelines, with a lite version that can be deployed on a consumer-grade graphics card. As an any-to-any feed-forward 3D reconstruction..- 1.1k

-

DeepSeek-OCR open-sourced: a single card processes 200,000 pages of documents per day

On October 21, it was reported that the DeepSeek team recently released a new study, DeepSeek-OCR, which proposes an "optical compression of text context" approach that offers a groundbreaking idea for long-text processing in large models. The research shows that rendering long text into images and then converting them into visual tokens can significantly reduce computation costs while maintaining high accuracy. Experimental data show that OCR decoding accuracy reaches 97% at compression ratios below 10x; even at a 20x compression ratio, accuracy remains at..- 1.6k

-

Ring-1T-preview: code generation surpasses GPT-5

On September 30, Ant Group announced early this morning the open-sourcing of Ring-1T-preview, its first self-developed trillion-parameter large model. It is a large model for natural-language reasoning and is described as the world's first open-source trillion-parameter reasoning model. According to official information from the Ant Bailing large model team, Ring-1T-preview is a preview version of the trillion-parameter reasoning model Ring-1T. Although it is only a preview, its natural-language reasoning is excellent. On the AIME 25 test, Ring-1T-preview scored 92.6 points..- 5.2k

-

The DeepSeek-V3.2-Exp model is officially released and open-sourced, with significantly reduced API prices

On September 29, DeepSeek officially released the DeepSeek-V3.2-Exp model, an experimental version. As an intermediate step towards a new-generation architecture, V3.2-Exp introduces DeepSeek Sparse Attention (a sparse attention mechanism) on top of V3.1-Terminus, to explore and validate optimizations for training and inference efficiency on long texts. DeepSeek Spa..- 1.7k

-

"HunyuanImage 3.0" open-sourced, benchmarked against the industry's leading closed-source models

Yesterday, the native multimodal image generation model "HunyuanImage 3.0" was officially released and open-sourced, with up to 80B parameters. It is described as the first open-source, industrial-grade native multimodal image generation model, as well as the strongest-performing open-source model with the most parameters to date, with results comparable to the industry's leading closed-source models. HunyuanImage 3.0 is significantly improved in semantic understanding, aesthetic quality and reasoning, and can parse the complex semantics of thousand-character prompts and produce high-quality images. Unlike the traditional combination of multiple models, HunyuanImage 3.0 uses..- 2.8k

-

"If, 10 years from now, we look back at how DeepSeek kept China from falling behind the United States, the answer is open source"

According to Jiemian News on September 28, CEO Kai-Fu Lee said on September 27, at the 20th-anniversary homecoming celebration of the Cheung Kong CEO program, that DeepSeek's central contribution to China's AI development was to promote the growth of the open-source ecosystem. "If, 10 years from now, we look back at how DeepSeek kept China from falling behind the United States, the answer will not be its technological capability per se, but that it ushered in China's (large-model) open-source era." Lee also mentioned that since DeepSeek open-sourced its models, a number of companies in the country have been open-sourcing..- 1.8k

-

NVIDIA open-sources the Audio2Face model: real-time AI-generated facial animation with multilingual lip sync

On September 25, it was reported that NVIDIA published a blog post yesterday, September 24, announcing the open-sourcing of its generative AI technology Audio2Face, covering the models, software development kits (SDKs) and a complete training framework, in the hope of accelerating the development of AI-powered virtual characters in games and 3D applications. By analyzing acoustic features in the audio and driving a virtual character's facial movements in real time, the technology produces accurate lip sync and natural emotional expressions, and can be widely applied in games, film and video production, and customer service. Audio2Fa..- 2.1k

-

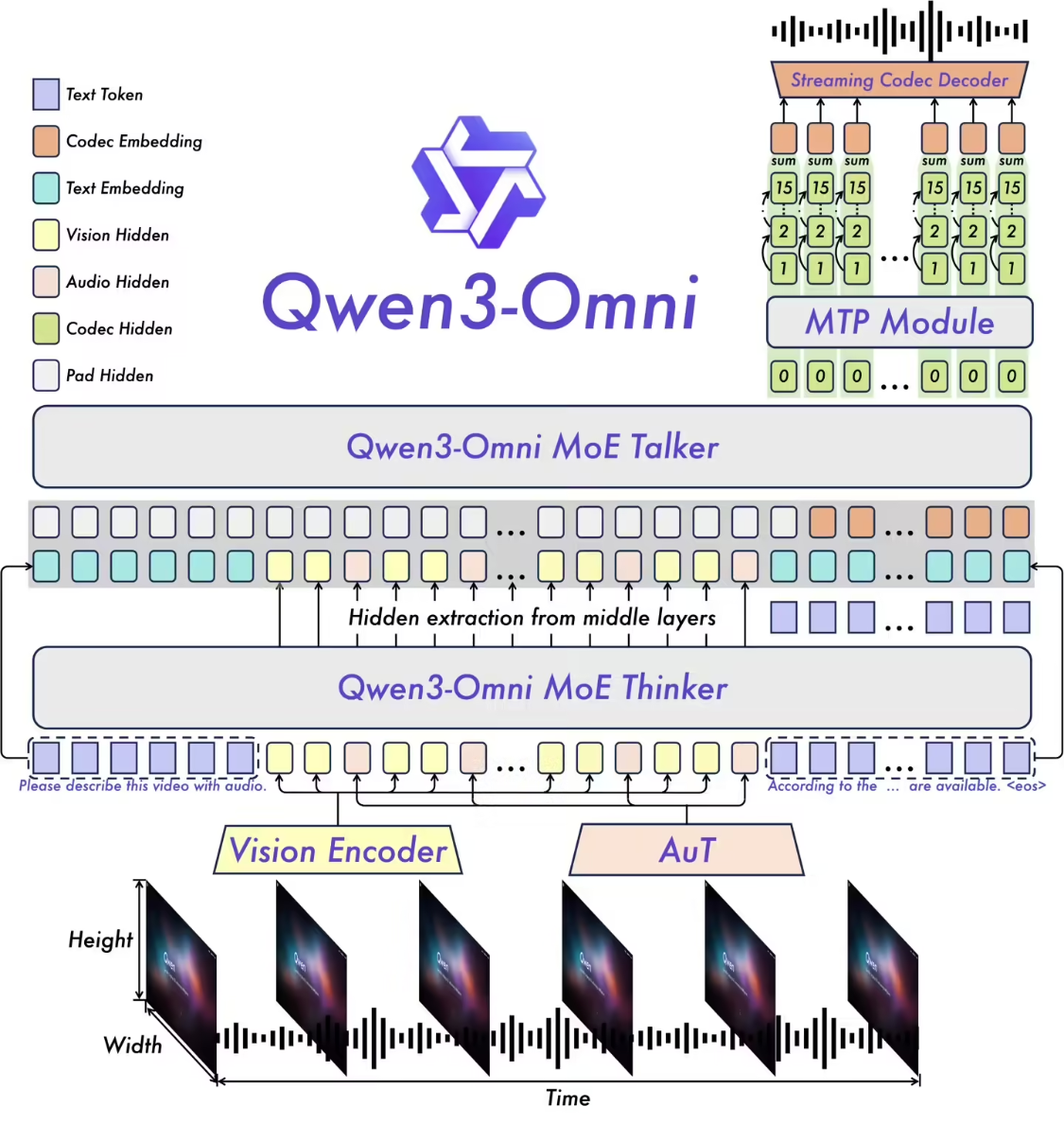

Qwen3-Omni, the first native end-to-end omni-modal AI model, released and open-sourced: text, image, audio and video unified

On September 23, in another familiar late-night release, Alibaba Tongyi released and open-sourced the new Qwen3-Omni, Qwen3-TTS and Qwen-Image-Edit-2509, the last positioned against Google's Nano Banana. Qwen3-Omni is described as the industry's first native end-to-end omni-modal AI model. It can process multiple input types, including text, images, audio and video, and resolves the long-standing problem of multimodal models having to trade off between different capabilities, with real-time output as text and natural speech..- 2.7k

-

Upload one image, drive any video: Alibaba Tongyi Wanxiang open-sources "the most powerful motion generation model", Wan2.2-Animate

On September 20, Alibaba Tongyi Wanxiang officially open-sourced its new motion generation model, Wan2.2-Animate. The model supports driving photos of people, anime characters and animals, and can be applied in areas such as short video creation, dance template generation and animation production. Wan2.2-Animate is a comprehensive upgrade of the previously open-sourced Animate Anyone model. It is not only significantly improved in character consistency and generation quality, but also supports two modes, motion imitation and character replacement: Motion imitation: input a character image and one..- 1.6k

-

Xiaomi open-sources MiMo-Audio, its first native end-to-end large speech model, with natural, interactive and human-like dialogue

On September 19, Xiaomi announced that it had open-sourced Xiaomi-MiMo-Audio, its first native end-to-end large speech model, which for the first time achieves ICL-based few-shot generalization in the speech domain. According to Xiaomi, five years ago GPT-3 first demonstrated that In-Context Learning (ICL) can be acquired through an autoregressive language model plus training on large-scale unlabeled data, while in the speech domain existing large models still rely heavily on large-scale labeled data and struggle to adapt to new tasks the way human intelligence does. And Xia..- 1.6k

-

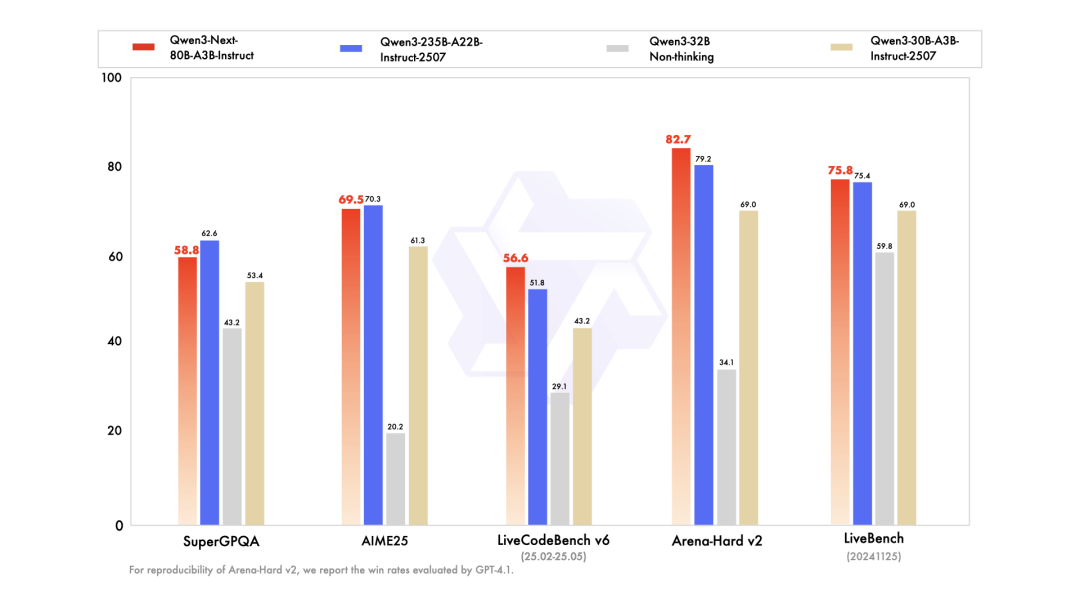

Alibaba Tongyi releases the Qwen3-Next base model architecture and open-sources the 80B-A3B series models

In the early hours of the morning, Alibaba Tongyi released its next-generation base model architecture, Qwen3-Next, and open-sourced the Qwen3-Next-80B-A3B series based on that architecture. According to the official introduction, the team believes that Context Length Scaling and Total Parameter Scaling are two major trends in the future development of large models. To further improve training and inference efficiency under long contexts and large total parameter counts, it designed the new Qwen3-Next model architecture. ..- 2.7k

-

Tencent Hunyuan Image Model 2.1 Released as Open-Source: Native 2K Image Generation with Support for Chinese and English Input

On September 10, it was reported that Tencent announced yesterday the open-sourcing of the new Hunyuan Image Model 2.1, which supports native 2K generation and native Chinese and English input. Tencent also open-sourced the "PromptEnhancer text rewriting model" at the same time: entering "draw a cute cat" is automatically expanded to "an orange shorthair cat on a checkered table with a cookie in its paws, watercolor style". It also supports two-way Chinese-English conversion, so that Chinese phrases such as "Starcake with Dream" can be rendered precisely, avoiding "expressive ambiguity". Hunyuan Image Model 2.1 supports 1k tokens..
